8 Techniques for Coreference Resolution in NLP

Coreference resolution is a crucial NLP task that helps machines understand when different words refer to the same entity in text. Here's a quick rundown of 8 key techniques:

- Rule-based methods

- Machine learning approaches

- Deep learning techniques

- Sieve-based methods

- Holistic mention pair models

- Entity-focused methods

- Linguistic constraints

- Hybrid approaches

These techniques have significantly improved NLP accuracy. For example, coreference resolution boosted fake news detection accuracy by 9.42% in one study.

Quick Comparison:

| Method | Pros | Cons |

|---|---|---|

| Rule-based | Fast, no training needed | Less accurate, rigid rules |

| Machine learning | Learns from data, adaptable | Requires labeled datasets |

| Deep learning | High accuracy, context understanding | Computationally intensive |

| Sieve-based | High precision, domain-specific | Can miss complex cases |

| Holistic mention pair | Considers whole document | May struggle with distant mentions |

| Entity-focused | Better with distant connections | Slightly less precise |

| Linguistic constraints | Consistent handling of known patterns | May not cover all cases |

| Hybrid | Combines strengths of multiple approaches | Can be complex to implement |

As NLP evolves, coreference resolution remains key to helping AI understand human language better.

Related video from YouTube

What is Coreference Resolution?

Coreference resolution is a crucial NLP task that links words or phrases referring to the same entity in a text. It's about connecting the dots between expressions that point to the same person, object, or concept.

Take this example:

"John loves his bike. He rides it every day."

Here, "He" refers to John, and "it" refers to the bike. Simple, right?

But why does it matter? Because it helps machines understand text like we do. It's not just about words; it's about grasping context and meaning.

Coreference resolution involves two main steps:

- Spotting potential referring expressions (like pronouns or names)

- Grouping these expressions when they refer to the same thing

It's not always easy, though. Language can be tricky:

- Words can be unclear (ambiguity)

- You need to understand the surrounding text (context)

- Sometimes, you need info beyond the text itself (world knowledge)

For instance:

"But days later, on 10 November, Mr. Morales stepped down and sought asylum in Mexico following an intervention by the chief of the armed forces calling for his resignation."

Here, "his" refers to Mr. Morales. But you'd need to know about world events to get that.

Why should you care? Because coreference resolution is key for:

- Information extraction

- Question answering systems

- Machine translation

- Text summarization

Better coreference resolution means better NLP tools overall.

As we dive into eight techniques for coreference resolution, remember: each method tackles these challenges differently. But they all aim to help machines understand text more like we do.

Getting Ready for Coreference Resolution

Let's set up for coreference resolution:

1. Pick a library

Stanford CoreNLP, spaCy, or OpenNLP are solid choices.

2. Install it

For NeuralCoref with spaCy:

pip install neuralcoref

3. Prep your environment

Make sure Python's installed and your dev setup is good to go.

4. Load models

Each library has its own. For Stanford CoreNLP:

java -cp stanford-corenlp-4.0.0.jar:stanford-corenlp-4.0.0-models.jar:* edu.stanford.nlp.pipeline.StanfordCoreNLP -annotators tokenize,pos,lemma,ner,parse,coref -coref.algorithm neural -file example_file.txt

5. Prep your text

Break it into sentences and tokens.

6. Pick your method

Here's a quick comparison:

| System | Language | Total Time | F1 Score |

|---|---|---|---|

| Deterministic | English | 3.98s | 49.5 |

| Statistical | English | 1.71s | 56.2 |

| Neural | English | 8.18s | 60.0 |

Speed or accuracy? Your call.

7. Test it out

Run a sample text to check if everything's working.

Coreference resolution isn't just about pronouns and nouns. It's about context, ambiguity, and sometimes even world knowledge.

Different methods have trade-offs. Rule-based? Fast but less accurate. Neural? More accurate but slower. Choose what fits your project.

Rule-Based Methods

Rule-based coreference resolution uses predefined algorithms and linguistic rules to spot coreferences in text. It's all about grammar rules and where words are placed.

Here's how to set up a rule-based system:

- Find potential entities

- Get their key features

- Clear up noun references with grammar rules

-

Use a multi-pass sieve approach:

- Start with exact matches

- Move to head matching

- End with pronoun resolution

During training, collect data on head word matches. This helps with a simple matching algorithm later.

Rule-based methods have pros and cons:

| Pros | Cons |

|---|---|

| Fast | Less accurate than ML |

| No training needed | Rules can be too rigid |

| Good for some languages | Struggles with complex references |

| High precision early on | Lower recall later |

| Easy to understand | Needs manual rule creation |

Take Stanford CoreNLP's deterministic system. It's quick (3.98 seconds) but scores just 49.5 F1. That's the trade-off.

These methods shine when mixed with machine learning. They're not perfect, but they're a solid start for coreference resolution.

2. Machine Learning Methods

Machine learning has become a powerhouse for coreference resolution in NLP. These methods learn from data to predict coreferences in new text.

Supervised Learning

Supervised learning uses labeled datasets to train coreference models. Here's the process:

1. Data prep

Models train on annotated corpora like MUC and ACE, which have marked coreferences.

2. Feature extraction

Models learn from:

- Lexical attributes (word forms, lemmas)

- Syntactic info (part-of-speech tags)

- Semantic attributes (named entity types)

3. Training

Common algorithms:

| Algorithm | How it works |

|---|---|

| Decision trees | Create decision-based tree models |

| SVMs | Classify data with hyperplanes |

| Neural networks | Process info through connected nodes |

4. Evaluation

F-scores measure model performance.

"Supervised coreference systems score between 0.7 and 0.8 in F-scores, showing high accuracy in identifying coreferential relationships."

Unsupervised Learning

Unsupervised methods find patterns without labeled data. They:

- Help when labeled data is scarce

- Often use clustering to group mentions

- Usually perform worse than supervised methods

Many systems combine rule-based and machine learning approaches for better results.

3. Deep Learning Methods

Deep learning has revolutionized coreference resolution in NLP. Let's dive into two key approaches: RNNs and BERT models.

Using RNNs

RNNs excel at coreference tasks due to their ability to handle text sequences. Here's a quick guide:

- Convert text to word embeddings

- Design an RNN that processes entire document context

- Train on labeled datasets

- Fine-tune based on test performance

RNNs are great for capturing context over long sequences, but they can struggle with very long documents.

Using BERT

BERT has taken coreference resolution to new heights. Here's how:

- Start with pre-trained BERT

- Fine-tune for your specific coreference task

- Create rich span representations

- Score mention pairs

BERT's bidirectional context understanding is its secret weapon for coreference tasks.

| Model | OntoNotes Improvement | GAP Improvement |

|---|---|---|

| BERT | +3.9 F1 | +11.5 F1 |

These numbers show BERT's coreference prowess. It's particularly good at distinguishing similar but different entities.

"BERT-large is particularly better at distinguishing between related but distinct entities compared to ELMo and BERT-base."

While BERT has made huge strides, there's still room for improvement in handling document-level context, conversations, and varied entity references.

Both RNNs and BERT have their strengths. RNNs shine with sequential data, while BERT excels at context understanding. Your choice depends on your specific task and resources.

4. Sieve-Based Methods

Sieve-based methods are like a series of filters for coreference resolution in NLP. They use multiple passes of rules to find and link related mentions in text.

Here's how they work:

- Set up sieves: Create a series of rule-based filters, ordered from most to least precise.

- Apply them in order: Run your text through each sieve, starting with the most precise one.

- Build on results: Each sieve uses info from the previous one.

- Refine and repeat: Tweak your rules and order to get better results.

Take the UET-CAM system used in the BioCreative V CDR task. It used nine sieves, including:

| Sieve | Rule Type | Example |

|---|---|---|

| 1 | ID matching | Exact string match |

| 2 | Abbreviation expansion | "FDA" → "Food and Drug Administration" |

| 3 | Grammatical conversion | Singular to plural forms |

| 4 | Synonym replacement | Using domain-specific synonyms |

Sieve-based methods have their ups and downs:

Pros:

- High precision in early sieves

- Easy to update

- Great for specific domains

- Can use expert knowledge

Cons:

- Might miss complex cases

- Needs careful rule design

- Can be slow

- Might not work well across different domains

These methods have shown good results. In the i2b2/VA/Cincinnati challenge, the MedCoref system scored an average F score of 0.84, landing in the middle of 20 teams.

For Indonesian language coreference resolution, a multi-pass sieve system scored a 72.74% MUC F-measure and a 52.18% BCUBED F-measure on 201 Wikipedia documents.

But remember: while effective, these methods often need fine-tuning and domain expertise to work their best.

sbb-itb-4f108ae

5. Holistic Mention Pair Models

Holistic mention pair models look at the whole document to solve coreference resolution. They're better at spotting connections that local models might miss.

Using These Models

Here's how to use holistic mention pair models:

- Train a classifier to spot coreferent mention pairs

- Look at the whole document, not just pairs

- Use the Easy-First Mention-Pair (EFMP) approach

- Include negative links for better results

- Form mention clusters to boost accuracy

Getting the Best Results

To squeeze the most out of these models:

- Mix different types of features

- Use machine learning (like SVMs)

- Add semantic info to your model

- Try beam search for decoding

- Check out the BestCut algorithm

- Play with feature combinations

Here's how an EFMP model performed on CoNLL data:

| Model | Development v4 | Test v4 | Test v7 |

|---|---|---|---|

| EFMP, all features, SVM | 60.02 | 59.12 | 55.38 |

| EFMP + JIM | 61.66 | 60.75 | 57.56 |

Adding Jaccard Item Mining (JIM) boosted the EFMP model's performance across the board.

6. Entity-Focused Methods

Entity-focused methods in coreference resolution look at the big picture. Instead of focusing on individual mentions, they zero in on entire entities within a text.

How to Use Entity-Focused Methods

Here's how to implement an entity-focused approach:

- Use Named Entity Recognition (NER) to spot entities

- Sort entities into categories (like person, organization, location)

- Look at the context around each entity

- Connect mentions that refer to the same entity based on context and type

Take the CorefAnnotator tool. It finds mentions of the same entity in a text - like when "Theresa May" and "she" are talking about the same person. It then creates a coreference graph, with the main words of mentions as nodes.

Comparing Methods

Let's stack entity-focused methods against mention-pair approaches:

| Aspect | Entity-Focused | Mention-Pair |

|---|---|---|

| Focus | Whole entities | Single mentions |

| Context | Global features | Local context only |

| Processing | Builds chains step-by-step | Scores all possible pairs |

| Performance | Better with distant connections | Might miss far-apart mentions |

A study showed entity-focused systems can outperform mention-pair ones:

| System | CoNLL Score |

|---|---|

| Entity-Focused | 52.5 |

| Mention-Pair | 30.4 |

The entity-focused system caught more connections but was slightly less precise.

Adding entity type info can boost performance even more. Khosla and Rose hit a CoNLL score of 80.26% on one dataset by using fine-grained entity typing.

"Adding NER style type-information to Lee et al. (2017) substantially improves performance across multiple datasets." - Khosla and Rose, Researchers

In short: Entity-focused methods look at the forest, not just the trees. They're proving to be a powerful tool in the coreference resolution toolkit.

7. Using Language Rules

Language rules are crucial for coreference resolution. They help link pronouns to the right antecedents, making NLP systems more accurate.

How to Use Language Rules

To nail language rules in coreference resolution:

- Check gender agreement

- Match number agreement

- Look at sentence structure

- Use meaning and context

Let's break it down:

Gender Agreement: Match pronouns to antecedents based on gender. Example: "John went to the store. He bought milk." "He" links to "John" because of gender.

Number Agreement: Make sure singular and plural forms match. Like this: "The cats are sleeping. They look comfortable." "They" refers to "cats" because of number.

Sentence Structure: Analyze how sentences are built. The CorefAnnotator tool creates a graph using main words as nodes, connecting related entities.

Meaning and Context: Use what words mean to improve accuracy. Knowing "The Seine" is a river helps link it to "This river" later on.

Mixing Rules and Stats

Combining language rules with stats can make coreference resolution even better:

- Start with rules

- Use stats for exceptions

- Keep testing and improving

The deterministic coreference system shows how this works. It uses rules and data files for things like demonyms, noun gender, and animacy to boost accuracy.

A study on French coreference resolution found some cool stuff:

| Feature | What it Does |

|---|---|

| Full NP coreference | Links noun pairs (e.g., "My cat... This animal") |

| Proper and common nouns | Connects entities (e.g., "The Seine... This river") |

| Null anaphora | Handles cases like "Peter drinks and ø smoke" |

This system uses multiple passes, starting simple and getting more complex as it goes.

8. Combined Methods

Want the best of both worlds in coreference resolution? Enter combined methods. These systems mix rule-based and machine learning techniques to pack a powerful punch.

Building a Combined System

Here's how to create a killer combined system:

- Lay down rule-based groundwork

- Add a machine learning layer

- Polish with more rules

Let's break it down:

Start with solid linguistic rules. Think gender and number agreement. Then, slap on a machine learning model trained on a massive corpus. Finally, use rules to clean up the ML output, especially for those tricky edge cases.

Take the University of Groningen's hybrid system. They beefed up a rule-based system with neural classifiers for mention detection, attributes, and pronoun resolution. The result? A serious performance boost.

Supercharging Your System

Want to take your combined system to the next level? Try these tricks:

- Use rules to cook up training data

- Apply linguistic filters (they can cut up to 92% of training material without losing oomph)

- Test on long documents (10,000+ words)

- Keep your rules fresh

- Retrain your ML models regularly

| Component | Job in Combined System |

|---|---|

| Rule-based | Handles known patterns consistently |

| Machine Learning | Tackles complex cases and new contexts |

| Linguistic Filters | Boosts precision, cuts noise |

| Neural Classifiers | Amps up specific subtasks |

Checking How Well Methods Work

Let's look at how we measure coreference resolution success and use that to improve our models.

Ways to Measure Success

We use four main metrics for coreference resolution:

- MUC (Message Understanding Conference)

- B-CUBED

- CEAF (Constrained Entity-Alignment F-Measure)

- BLANC (Bilateral Assessment of Noun-Phrase Coreference)

Here's a quick comparison:

| Metric | Measures | Good | Not So Good |

|---|---|---|---|

| MUC | Correct links in chains | Works with singleton clusters | Least discriminative |

| B-CUBED | Precision and recall of clusters | Entity-based | Affected by mention ID |

| CEAF | Entity-level evaluation | Focuses on entity structure | Can be hard to interpret |

| BLANC | Uses Rand index | Balanced approach | Sensitive to system mentions |

To use these metrics:

- Run your model on test data

- Compare to human-annotated "gold standard"

- Calculate metrics with specialized software

No single metric tells the whole story. That's why we often use an average of MUC, B-CUBED, and CEAF to rank resolvers.

Making Models Better

Got your metrics? Time to fine-tune. Here's how:

- Find error patterns: What does your model struggle with?

- Go for big wins: In one study, coreference resolution boosted fake news detection accuracy by 9.42% and F1 score by 15.58%.

- Use varied datasets: Try CoNLL-2012 Shared Task and GAP Coreference Resolution Dataset.

- Preprocess: Apply coreference resolution before classification for better results.

- Cross-validate: Use K-fold cross-validation for reliable performance estimates.

- Mix methods: Combine rule-based and machine learning approaches.

- Stay current: Keep up with new metrics and benchmarks.

Conclusion

Coreference resolution is a big deal in NLP. Here are the 8 main techniques:

- Rule-based methods

- Machine learning approaches

- Deep learning techniques

- Sieve-based methods

- Holistic mention pair models

- Entity-focused methods

- Linguistic constraints

- Hybrid approaches

These methods have made machines better at understanding language. Stanford CoreNLP and BERT-based models have really upped the accuracy game.

But this isn't just academic stuff. Coreference resolution is now crucial for:

- Chatbots

- Document analysis systems

- Healthcare AI assistants

Get this: it even boosted fake news detection accuracy by 9.42% and F1 score by 15.58% in one study.

So, what's next? The field is heading towards:

- Mixing symbolic and neural approaches

- Adding world knowledge

- Tackling ethical issues and biases

Multilingual and cross-lingual methods are also getting hot, thanks to the need to handle tons of data in different languages.

| Future Focus | What It Means |

|---|---|

| Fine-grained resolution | Better accuracy for tricky language structures |

| Contextual embeddings | Understanding context-dependent references |

| Cross-lingual resolution | Models that work in multiple languages |

| Event coreference | Linking mentions of the same event in text |

Bottom line: As NLP grows, coreference resolution will be key in helping AI understand and interact with human language.

FAQs

What is co referencing in NLP?

Coreference resolution in NLP finds words that refer to the same thing in text. It's how machines understand who or what we're talking about.

Here's what it does:

- Links words pointing to the same entity

- Creates "coreference chains"

- Clears up confusion in text

For example:

"John loves cycling. He rides his mountain bike often."

Here, "He" and "his" refer to "John". Coreference resolution connects these dots.

Why it matters:

- Helps summarize documents

- Improves question answering

- Boosts information extraction

It's made NLP tasks more accurate:

| Task | Improvement |

|---|---|

| Fake news detection | 9.42% more accurate |

| F1 score | 15.58% higher |

There are different ways to do this:

- Rule-based

- Machine learning

- Deep learning

Neural models are showing good results. Stanford CoreNLP data shows:

| System Type | F1 Score (English) |

|---|---|

| Deterministic | 49.5 |

| Statistical | 56.2 |

| Neural | 60.0 |

As NLP grows, coreference resolution will help AI understand human language better.

Related posts

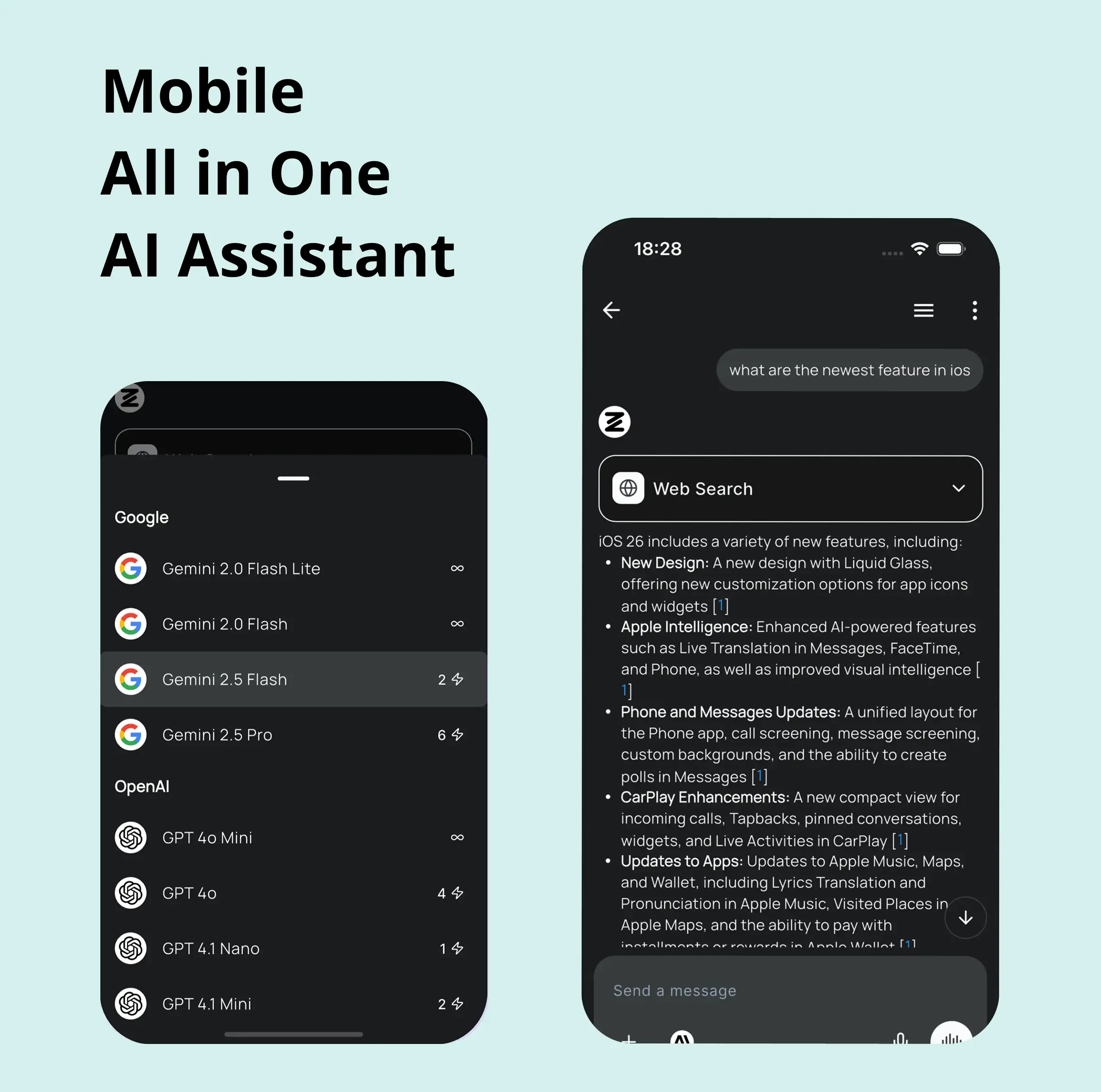

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

Straightforward, affordable pricing

Save hours of work and research

Affordable plan for power users

Plus

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

Professional

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

What Our Users Say

Great Tool after 2 months usage

simplyzubair

I love the way multiple tools they integrated in one platform. So far it is going in right dorection adding more tools.

Best in Kind!

barefootmedicine

This is another game-change. have used software that kind of offers similar features, but the quality of the data I'm getting back and the sheer speed of the responses is outstanding. I use this app ...

simply awesome

MarianZ

I just tried it - didnt wanna stay with it, because there is so much like that out there. But it convinced me, because: - the discord-channel is very response and fast - the number of models are quite...

A Surprisingly Comprehensive and Engaging Experience

bruno.battocletti

Zemith is not just another app; it's a surprisingly comprehensive platform that feels like a toolbox filled with unexpected delights. From the moment you launch it, you're greeted with a clean and int...

Great for Document Analysis

yerch82

Just works. Simple to use and great for working with documents and make summaries. Money well spend in my opinion.

Great AI site with lots of features and accessible llm's

sumore

what I find most useful in this site is the organization of the features. it's better that all the other site I have so far and even better than chatgpt themselves.

Excellent Tool

AlphaLeaf

Zemith claims to be an all-in-one platform, and after using it, I can confirm that it lives up to that claim. It not only has all the necessary functions, but the UI is also well-designed and very eas...

A well-rounded platform with solid LLMs, extra functionality

SlothMachine

Hey team Zemith! First off: I don't often write these reviews. I should do better, especially with tools that really put their heart and soul into their platform.

This is the best tool I've ever used. Updates are made almost daily, and the feedback process is very fast.

reu0691

This is the best AI tool I've used so far. Updates are made almost daily, and the feedback process is incredibly fast. Just looking at the changelogs, you can see how consistently the developers have ...