At its heart, artificial intelligence image analysis is about teaching computers to see and understand the world just like we do. It’s the difference between a machine simply registering a collection of pixels and it actually recognizing the objects, patterns, and context within a picture. This ability is what allows for automated decisions on a massive scale, and it's a capability you can leverage directly through platforms like Zemith.

What Exactly Is AI Image Analysis?

Think about how a child learns to spot a cat. You don't describe the individual pixels in a photo; you just show them pictures of cats. Over time, they start to pick up on the key features—pointy ears, whiskers, a long tail. Artificial intelligence image analysis is built on a similar idea, but it operates with enormous computational power.

Algorithms are fed gigantic datasets of images, letting them learn to identify incredibly complex visual patterns at a speed no human reviewer can match and, on well-defined tasks, with accuracy that can rival or exceed human performance.

This isn't just a party trick for finding cats on the internet. It's about giving machines a practical way to process and make sense of visual information. For businesses, this opens the door to automating tedious tasks, pulling new insights from visual data, and simply making smarter decisions, faster. An actionable first step is identifying a repetitive visual task in your workflow that could be automated.

From Pixels to Patterns

The fundamental goal here is to convert raw visual chaos into structured, useful data. To a computer, a picture is just a giant grid of numbers that represent color and brightness. AI models, especially those based on deep learning, are trained to find the meaningful arrangements hiding within that grid.
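To make the "grid of numbers" idea concrete, here is a minimal sketch using NumPy. The tiny 4x4 image and the variable names are made up for illustration; real photos are simply much larger grids of the same kind.

```python
import numpy as np

# A tiny 4x4 grayscale "image": each number is one pixel's brightness
# (0 = black, 255 = white). The left half is dark, the right half is bright.
gray = np.array([
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
], dtype=np.uint8)

# A color image adds a third axis holding red, green, and blue values per pixel.
rgb = np.zeros((4, 4, 3), dtype=np.uint8)
rgb[..., 0] = gray  # copy the brightness pattern into the red channel only

print(gray.shape)   # (4, 4): height x width
print(rgb.shape)    # (4, 4, 3): height x width x color channels
print(gray.mean())  # 127.5: average brightness across the whole grid
```

Everything a model does with an image, from finding edges to recognizing a face, starts from arithmetic on arrays like these.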

Once a machine can do that, it unlocks some powerful business advantages:

- Automation at Scale: Think about tasks that used to take hours of manual review, like checking products for tiny defects or sorting through stacks of documents. AI can now handle that in seconds.

- Enhanced Accuracy: An AI model doesn’t get tired or distracted. It can catch subtle flaws or anomalies that a human might easily overlook, especially at the end of a long shift.

- Data-Driven Insights: By analyzing thousands of images, AI can spot trends that would be impossible to see otherwise, like which store displays are most effective at grabbing a customer's attention.

Making AI Accessible for Business Growth

Not long ago, getting into this kind of technology meant a huge investment in specialized hardware and a team of data scientists. Thankfully, that's no longer the case.

Modern AI platforms are designed to bring sophisticated image analysis tools to businesses of all sizes, turning complex algorithms into practical solutions for real-world challenges.

This is where platforms like Zemith come in. They provide the ready-made infrastructure and tools that let companies use artificial intelligence image analysis without having to build everything from scratch. From quality control on a factory floor to analyzing satellite imagery, these tools are becoming vital for staying competitive. The key is to leverage these platforms to test your ideas quickly with a pilot project.

To see how this is being applied in a specific field, explore AI's role in transforming drone flights and see how visual data is being put to work from the sky.

How a Computer Learns to See

How does a machine go from seeing a jumble of pixels to spotting a tiny flaw on a fast-moving assembly line? The process is a lot like how a small child learns to recognize objects for the first time. It all starts with the basics.

A toddler doesn't instantly know what a "house" is. They first learn to see simple things like lines, curves, and corners. Slowly, they piece those elements together to form more complex shapes—squares and triangles—and eventually, they can point to a drawing and say "house."

That layered learning is the core idea behind Convolutional Neural Networks (CNNs), the workhorse of modern artificial intelligence image analysis. A CNN looks at an image in stages. The first few layers might only spot simple edges or changes in color. But the deeper layers combine that information to recognize textures, patterns, and, finally, complete objects like a face or a car.
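The kind of edge spotting an early CNN layer does can be sketched by hand. The example below slides one small filter over a toy image with NumPy. Two assumptions to flag: the filter here is a fixed Sobel-style kernel chosen for illustration, whereas a real CNN learns its filter values during training, and the function name `convolve2d` is just a label for this sketch.

```python
import numpy as np

def convolve2d(image, kernel):
    """Slide a small kernel over the image (no padding) and sum the products.

    Deep learning libraries call this operation convolution; strictly speaking
    it is cross-correlation, because the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 5x6 image: dark (0) on the left, bright (1) on the right.
image = np.zeros((5, 6), dtype=float)
image[:, 3:] = 1.0

# Sobel-style kernel that responds to left-to-right brightness changes.
vertical_edge_kernel = np.array([
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
], dtype=float)

response = convolve2d(image, vertical_edge_kernel)
print(response[0])  # [0. 4. 4. 0.]: strong response only where dark meets bright
```

The output is zero over flat regions and large at the boundary, which is exactly the "simple edge" signal that deeper layers then combine into textures and shapes.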

The Training Ground for AI Vision

Of course, for an AI to learn anything, it needs a good teacher. In this case, the teacher is data—lots and lots of it. The learning process isn't some black-box magic; it's a methodical, three-step journey that turns a blank-slate model into a highly skilled visual expert.

It's this training methodology that's fueling the entire field's growth. The global market for AI-based image analysis was valued at USD 13.07 billion in 2023 and is on track to hit USD 36.36 billion by 2030. That growth is driven in large part by these machine learning techniques. You can read the full research about these market trends to get a sense of just how quickly the industry is adopting this tech.

Here’s a breakdown of how that training actually works:

1. Gathering and Labeling Data: It all begins with collecting a huge library of images. Humans then have to meticulously label each one, essentially pointing out what the AI should be looking for. If you're building a system to find manufacturing defects, each image in your dataset gets tagged as either "defective" or "non-defective."

2. Training the Model: Next, this labeled dataset is fed into the AI. The model churns through the images, hunting for the statistical patterns that consistently show up for each label. It tweaks its internal settings over and over, getting a little bit smarter with every pass.

3. Validating Accuracy: Once the training wheels are off, the model is tested on a fresh set of labeled images it has never encountered before. This is the final exam. It proves the model has actually learned the underlying patterns and hasn't just memorized the training answers.
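The three steps above can be sketched in a few lines of NumPy. This is a deliberately simplified stand-in, not how a production vision model is built: each "image" is reduced to two made-up feature values, the data is synthetic, and the model is plain logistic regression rather than a deep network. What it does show faithfully is the label, train, and validate-on-unseen-data loop.

```python
import numpy as np

rng = np.random.default_rng(0)

# Step 1: labeled data. Synthetic stand-in: each "image" is summarized by
# two hypothetical feature values; label 0 = non-defective, 1 = defective.
n = 200
good = rng.normal(loc=[0.2, 0.2], scale=0.1, size=(n, 2))
defective = rng.normal(loc=[0.8, 0.8], scale=0.1, size=(n, 2))
X = np.vstack([good, defective])
y = np.concatenate([np.zeros(n), np.ones(n)])

# Hold out 25% of the examples so the model never sees them during training.
order = rng.permutation(len(X))
split = int(0.75 * len(X))
train_idx, val_idx = order[:split], order[split:]

# Step 2: training. Repeatedly nudge the weights to reduce prediction error.
w = np.zeros(2)
b = 0.0
learning_rate = 0.5
for _ in range(500):
    z = X[train_idx] @ w + b
    p = 1.0 / (1.0 + np.exp(-z))      # predicted probability of "defective"
    error = p - y[train_idx]
    w -= learning_rate * (X[train_idx].T @ error) / len(train_idx)
    b -= learning_rate * error.mean()

# Step 3: validation. Score the model only on the held-out examples.
val_pred = (X[val_idx] @ w + b) > 0
val_accuracy = (val_pred == y[val_idx].astype(bool)).mean()
print(f"validation accuracy: {val_accuracy:.2f}")
```

The key design choice is the held-out split: accuracy measured on the training examples can look great even when a model has only memorized them, so the number that matters is the one computed on images it has never seen.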

Accelerating the Learning Curve

As you can imagine, this whole process can be a heavy lift. It's often slow, demands a ton of computing power, and requires some serious technical know-how. For most organizations, building a model from scratch just isn't realistic. It’s a huge barrier that can stop a great idea in its tracks.

The key to making AI image analysis accessible is to bypass the most difficult parts of the training process. By starting with a solid foundation, businesses can focus on solving their specific problems instead of reinventing the wheel.

This is where a platform like Zemith really shines. Instead of making you start from square one, Zemith gives you access to pre-trained models and easy-to-use workflows. These models have already learned the fundamentals of vision from massive datasets, so you can fine-tune them for your unique task with far less data and effort. This allows you to rapidly prototype a solution and see tangible results quickly.

This approach dramatically cuts down development time, letting you get powerful artificial intelligence image analysis solutions up and running much more quickly and cost-effectively.

How AI Image Analysis Actually Works: A Step-by-Step Look

Turning a raw image into a meaningful insight isn't magic—it's a well-defined process. This workflow, often called a pipeline, is a predictable series of steps that takes visual data from its original state and turns it into intelligence you can actually use. Getting a handle on this journey is crucial to understanding how artificial intelligence image analysis produces real-world results.

Think of it like a recipe. Every ingredient and every step is vital for the final dish. If you skip a stage or rush through it, the quality of the outcome suffers. In the same way, a solid AI workflow ensures the final analysis is accurate, reliable, and genuinely useful for making decisions.

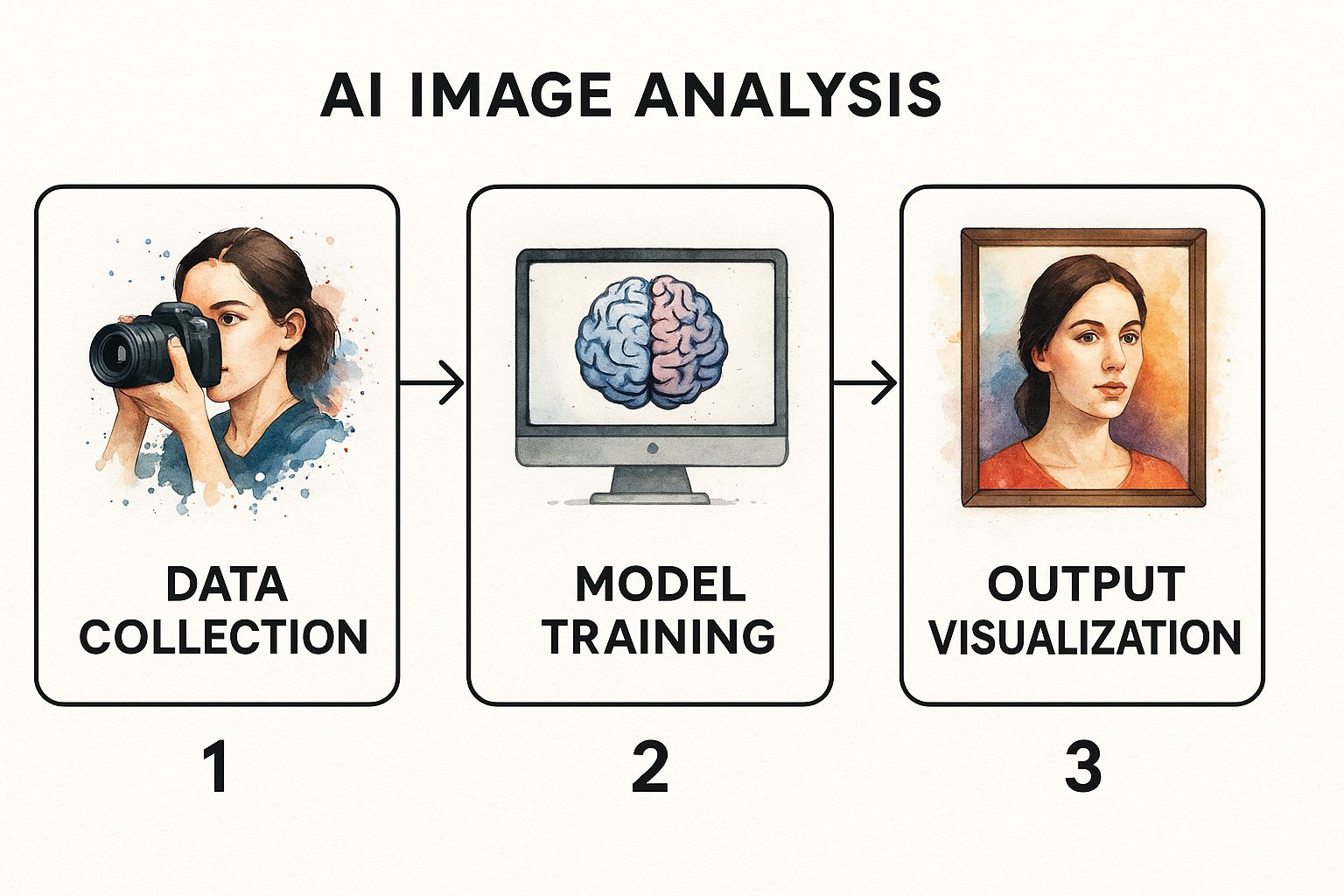

This visual gives you a high-level view of the core stages in a typical AI image analysis pipeline, from gathering the initial data all the way to producing the final results.

As the infographic shows, it’s a methodical process. Each step builds directly on the one before it, which is why a structured approach is so critical for success.

A typical artificial intelligence image analysis pipeline consists of several key stages, each with a specific job to do. Let's break down the five main stages that take an image from a simple file to a powerful business tool.

The 5 Stages of an AI Image Analysis Pipeline

| Stage | Purpose | Example Task |
|---|---|---|
| 1. Data Acquisition | Gather all relevant visual data for the project. | Collecting thousands of images of product defects from a factory assembly line. |
| 2. Preprocessing | Clean, standardize, and prepare raw images for the model. | Resizing all images to a uniform 256x256 pixels and adjusting brightness. |
| 3. Feature Extraction | Identify and isolate the most important visual patterns in the images. | The model learns to detect the specific edges, textures, and colors that define a "crack." |
| 4. Model Training & Validation | Teach the AI to recognize patterns and test its accuracy. | Feeding the model labeled images of "cracked" vs. "not cracked" parts and then testing it on a new set of images it hasn't seen before. |
| 5. Inference & Output | Apply the trained model to new, unseen data to generate predictions. | A live camera feed sends images to the model, which flags a product as "defective" in real-time. |

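Ultimately, a well-managed pipeline is what makes AI practical. It ensures that the model isn't just a black box but a reliable system that consistently delivers value. The walkthrough below groups these five stages into three broader ones: getting your data in order, teaching the model, and putting it to work.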

Stage 1: Getting Your Data in Order

It all starts with the data. This could be any visual information you need to analyze—satellite imagery, medical scans, security camera footage, or even photos of products on a shelf. The quality and quantity of this initial data are, without a doubt, the most important factors for success.

But raw images are rarely ready to go right out of the box. They often need a lot of cleaning and organizing, a step we call preprocessing. It’s like the prep work in a professional kitchen; it isn't glamorous, but it’s absolutely essential.

Preprocessing involves tasks like:

- Resizing and Normalizing: Making sure all images have a consistent size, format, and pixel-value range.

- Removing Noise: Cleaning up blurry or grainy images to give the model a clearer view.

- Data Augmentation: Artificially creating new versions of your existing images (like rotating or flipping them) to expand the dataset and build a more robust model.
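Here is a rough sketch of what two of those tasks, resizing/normalizing and augmentation, can look like in NumPy. The function names are illustrative, the "raw" image is random noise standing in for a camera frame, and nearest-neighbor resizing is the simplest possible method; image libraries offer smoother interpolation. Noise removal is left out to keep the example short.

```python
import numpy as np

def resize_nearest(image, size):
    """Resize to size x size by picking the nearest source pixel for each output pixel."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return image[rows][:, cols]

def normalize(image):
    """Scale 0-255 pixel values into the 0.0-1.0 range."""
    return image.astype(np.float32) / 255.0

def augment(image):
    """Return the original plus a mirrored and a 90-degree-rotated copy."""
    return [image, np.fliplr(image), np.rot90(image)]

# Stand-in for a raw 480x640 grayscale camera frame.
raw = np.random.default_rng(0).integers(0, 256, size=(480, 640), dtype=np.uint8)

prepared = normalize(resize_nearest(raw, 256))
variants = augment(prepared)

print(prepared.shape)  # (256, 256)
print(len(variants))   # 3 training examples from one source image
```

Because every image ends up the same shape and value range, the model can process them in uniform batches, and the flipped and rotated copies give it more variety to learn from without any new photos being taken.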

Stage 2: Teaching the AI to "See"

Once your data is clean and prepared, it's time to train the AI model. This is where the machine learns to recognize the patterns that matter by analyzing the images you've fed it. The model constantly adjusts its internal settings until it can accurately perform its assigned task, whether that’s spotting defects on a production line or classifying different types of cells in a microscope slide.

After the initial training, the model goes through a critical validation phase. We test it against a completely new set of images it's never seen before to see how it performs in a real-world scenario. This step is vital to make sure the model has actually learned to generalize and not just memorized the training examples.

This loop of training, testing, and refining is fundamental. A model's accuracy isn't a one-and-done deal; it's the result of continuous improvement, which is why having a flexible platform is so important for long-term success.

Stage 3: Putting the Model to Work

The final stage is inference, where the fully trained model gets put to work on new, live data. This is the payoff. The AI analyzes incoming images and produces an output—a classification, an object detection, or a segmented area. For example, it might flag a product on a conveyor belt as "defective" or draw a box around a car in a video stream.

But the output itself isn’t the end of the story. The real value comes from interpreting these results and turning them into action. A platform like Zemith is designed to simplify this entire pipeline. It offers tools for easy data management, provides pre-trained models to speed up development, and includes intuitive dashboards to help you visualize and act on the model's outputs. This makes sophisticated artificial intelligence image analysis something any organization can achieve.

The 4 Core Tasks of AI Image Analysis

Artificial intelligence image analysis isn't some single, magical process. It's more like a specialized toolkit, with each tool built for a very specific job. Getting a handle on these core tasks is the first real step toward figuring out which one can solve your particular business problem.

Most challenges that involve visual data can be tackled by one of four primary functions. By breaking the technology down this way, you can go from a fuzzy idea like "we should use AI" to a concrete plan of action.

Let's dive into what each of these capabilities does, using some practical, real-world scenarios.

1. Image Classification: What Is This a Picture Of?

Image classification is the most straightforward task of the bunch. Think of it as the AI looking at a photo and giving it one simple, high-level tag. The goal is to answer the basic question: "What's the main subject here?"

The model analyzes the entire image and assigns it to a predefined category, like "dog," "car," or "building." It won't tell you where the dog is in the photo, just that the photo is, in fact, of a dog.

- Business Scenario: A social media platform needs to categorize millions of user-uploaded photos every day. An image classification model can instantly sort images into folders like "landscape," "food," "portrait," or even flag them as "inappropriate." This makes content moderation scalable and lightning-fast. With a tool like Zemith, you could build a workflow to handle this automatically, no human intervention required.
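Under the hood, a classifier typically ends by turning one raw score per category into probabilities and picking the highest. The sketch below shows that last step only. The category list mirrors the scenario above, and the score values are invented to stand in for what a trained model might emit for a single photo.

```python
import numpy as np

CATEGORIES = ["landscape", "food", "portrait", "inappropriate"]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

def classify(scores):
    """Return the most likely category and the model's confidence in it."""
    probs = softmax(np.asarray(scores, dtype=float))
    best = int(np.argmax(probs))
    return CATEGORIES[best], float(probs[best])

# Hypothetical raw scores for one uploaded photo, one score per category.
label, confidence = classify([1.2, 4.0, 0.3, -1.0])
print(label, round(confidence, 2))  # food 0.92
```

In a moderation workflow, the confidence value is as useful as the label: low-confidence results can be routed to a person instead of being acted on automatically.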

2. Object Detection: Where Are the Items in This Picture?

Object detection takes things a step further. It doesn't just tell you what's in an image; it also tells you where each item is by drawing a rectangle, called a bounding box, around it. This task answers the question, "What objects are in this scene, and what are their locations?"

If you have a picture with three cars and two pedestrians, an object detection model will find all five of them and draw a neat rectangle around each one. This is absolutely critical for any application that needs to count or track individual items.

- Business Scenario: A retail chain wants to keep its shelves stocked. Cameras running an object detection model can constantly scan the aisles, identify every product, and keep a running tally. The moment a popular soda drops below a certain number, the system automatically sends an alert to an employee's handheld device to restock it, preventing a lost sale.
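The shelf-monitoring logic in that scenario can be sketched once you assume the detector has already run. Everything here is hypothetical for illustration: the `Detection` structure, the product names, the confidence cutoff, and the minimum stock levels. A real detector would supply the list of detections for each camera frame.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Detection:
    label: str         # what the model thinks the object is
    confidence: float  # how sure it is, from 0.0 to 1.0
    box: tuple         # bounding box as (x_min, y_min, x_max, y_max) in pixels

def count_products(detections, min_confidence=0.5):
    """Tally detections per product, ignoring low-confidence guesses."""
    return Counter(d.label for d in detections if d.confidence >= min_confidence)

def restock_alerts(counts, minimums):
    """List every product whose visible count is below its minimum."""
    return [product for product, minimum in minimums.items()
            if counts.get(product, 0) < minimum]

# Hypothetical detector output for one frame of a shelf camera.
detections = [
    Detection("cola", 0.94, (10, 20, 60, 120)),
    Detection("cola", 0.88, (70, 20, 120, 120)),
    Detection("cola", 0.31, (130, 20, 180, 120)),  # too uncertain to count
    Detection("chips", 0.91, (10, 140, 80, 220)),
    Detection("chips", 0.85, (90, 140, 160, 220)),
    Detection("chips", 0.90, (170, 140, 240, 220)),
]

counts = count_products(detections)
alerts = restock_alerts(counts, {"cola": 3, "chips": 2})
print(alerts)  # ['cola']
```

Only two colas clear the confidence cutoff, which is below the minimum of three, so cola is the one product flagged for restocking.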

The growth in this area is staggering for a reason. AI-based image recognition, which leans heavily on object detection, is projected to be a USD 4.97 billion market in 2025 and is expected to nearly double to USD 9.79 billion by 2030. This boom is driven by services that make it easier to build and manage these models. You can discover more insights about the AI recognition market and its incredible expansion.

3. Image Segmentation: What Is the Exact Outline of This Item?

While object detection gives you a rough box, image segmentation is all about precision. It labels every pixel to trace an object's outline, answering the question, "What is the exact shape and boundary of everything in this image?"

It's like digitally tracing around an object, pixel by pixel. This level of detail is essential when an object's precise shape, size, or orientation matters. Think of a medical AI outlining a tumor in an MRI scan or a self-driving car identifying the exact form of a cyclist.

Image segmentation provides the highest level of detail, moving beyond simple identification to a granular understanding of an object's form. This precision unlocks advanced analytical capabilities that are impossible with bounding boxes alone.

- Business Scenario: An agricultural tech company uses drones to monitor crop health. An image segmentation model can analyze the aerial photos and perfectly outline every single plant in a field. By calculating the exact area of healthy green leaves versus yellowing, unhealthy ones, farmers can target their application of water or fertilizer with surgical precision, saving money and boosting their crop yield.
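The area calculation in that scenario is simple once a segmentation mask exists. In the sketch below, the mask is a small hand-written grid standing in for a model's output, and the class codes (0, 1, 2) are an assumption made for this example.

```python
import numpy as np

# Assumed class codes for each pixel in the mask.
BACKGROUND, HEALTHY, UNHEALTHY = 0, 1, 2

# Hypothetical segmentation output for a tiny patch of an aerial photo.
mask = np.array([
    [0, 0, 1, 1, 1, 0],
    [0, 1, 1, 1, 2, 0],
    [0, 1, 1, 2, 2, 0],
    [0, 0, 1, 2, 0, 0],
])

def leaf_fractions(mask):
    """Return (healthy, unhealthy) shares of all plant pixels in the mask."""
    plant_pixels = np.count_nonzero(mask != BACKGROUND)
    if plant_pixels == 0:
        return 0.0, 0.0
    healthy = np.count_nonzero(mask == HEALTHY) / plant_pixels
    unhealthy = np.count_nonzero(mask == UNHEALTHY) / plant_pixels
    return healthy, unhealthy

healthy, unhealthy = leaf_fractions(mask)
print(f"healthy: {healthy:.0%}, unhealthy: {unhealthy:.0%}")  # healthy: 69%, unhealthy: 31%
```

A bounding box could only say "there is a plant here"; the per-pixel mask is what makes it possible to measure how much of that plant is in trouble.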

4. Feature Recognition: What Specific Details Are Present?

Feature recognition is about zooming in on the fine-grained details. Its best-known forms are Optical Character Recognition (OCR) and facial recognition. This task is all about extracting very specific information, like reading the text off a license plate or identifying a particular person's face.

It goes beyond a generic label like "person" or "document" to pull out actionable data. It answers the question, "What specific text, faces, or unique features are in this image?"

- Business Scenario: A logistics company is automating its sorting facility. An AI model using feature recognition scans the shipping label on every package that flies by on a conveyor belt. It instantly reads the address, tracking number, and barcode. That data is then fed into the system to automatically route the package to the right truck, massively increasing speed and cutting down on human error.

Each of these four tasks offers a different way to pull value from visual data. The real trick is matching the right tool to your business goal. A platform like Zemith offers specialized models for each of these core functions, helping you pick and implement the perfect artificial intelligence image analysis solution for whatever you're trying to build.

AI Image Analysis in Action Across Industries

Theory is one thing, but seeing technology solve real problems is where the magic happens. The true power of artificial intelligence image analysis isn't just in the lab; it’s out in the world, tackling difficult challenges and opening up new possibilities. This tech is already a game-changer across wildly different fields.

From helping doctors diagnose diseases faster to making sure the products we buy are up to snuff, AI-powered visual tools are working behind the scenes in ways you might not expect. Let's look at a few mini-case studies to see how this technology is making a real difference in healthcare, retail, and manufacturing.

Revolutionizing Medical Diagnostics

In medicine, every second counts, and accuracy is everything. Think about radiologists, who spend their entire day poring over complex scans like X-rays and MRIs, hunting for tiny, almost invisible signs of disease. It’s a pressure-cooker job where human fatigue can have devastating consequences.

This is where AI image analysis comes in as a brilliant co-pilot. An AI model, trained on a massive library of millions of medical images, can review a patient's scan in a flash, flagging areas of concern that a human might overlook. It’s like having a second set of tireless, expert eyes, which boosts diagnostic accuracy and frees up doctors to concentrate on creating treatment plans.

The impact is huge, especially in highly specialized areas. Take retina image analysis, for example. That market was valued at an estimated USD 2.65 billion in 2023 and is expected to soar to USD 9.41 billion by 2033. This boom is fueled by AI systems that are incredibly good at spotting conditions like diabetic retinopathy and macular degeneration from retinal scans.

Transforming the Retail Experience

The retail world is drowning in visual data, from the products lining store shelves to the endless scroll of online catalogs. Artificial intelligence image analysis is helping retailers build smarter, more responsive operations that ultimately make shopping better for all of us.

One of the coolest applications you've probably already seen is visual search. Instead of fumbling for the right keywords to describe that jacket you saw someone wearing, you can just snap a picture and upload it. An AI model analyzes the image and, seconds later, shows you similar items from the store’s inventory. This is a perfect example of a high-impact feature you can build and test using a platform like Zemith.

But the real workhorse applications are happening behind the scenes, solving age-old retail headaches like empty shelves.

- Automated Inventory Checks: Smart cameras with object detection can continuously scan shelves to track stock levels.

- Real-Time Alerts: As soon as an item is running low, the system pings an employee to restock it, preventing those frustrating "out of stock" moments.

- Planogram Compliance: AI can even check if products are displayed according to the store's layout plan, keeping the shopping experience consistent.

Ensuring Quality in Manufacturing

On a busy factory floor, quality control is a constant battle. Having people manually inspect products is slow, costly, and, well, human. We get tired and make mistakes, especially when defects are too small for the naked eye to see. A single bad part can trigger expensive recalls and tarnish a company’s reputation.

This is why many manufacturers are now putting AI-powered cameras right on their assembly lines. Using techniques like image segmentation, these systems inspect every single item as it goes by, spotting microscopic cracks, subtle misalignments, or other flaws with a level of precision that a person just can't match.

By catching subtle flaws in real-time, manufacturers can significantly reduce waste, improve product reliability, and keep far more defective items from leaving the factory. Inspecting every single item at this level of detail was rarely practical with manual checks alone.

Beyond these core areas, AI image analysis pops up in more creative fields, too, like helping creators understand and improve their visual storytelling. Whether it’s in a sterile cleanroom or a chaotic supermarket, the ability to make sense of images is creating enormous value. These examples just scratch the surface of this technology's versatility, and a flexible platform like Zemith offers the building blocks to create custom solutions for just about any industry.

Putting AI Image Analysis to Work for You

So, how do you actually get started with artificial intelligence image analysis? It’s tempting to jump straight into the tech, but the most important first step has nothing to do with algorithms. It starts with a simple, focused question: What problem am I actually trying to solve?

Getting that question nailed down is everything. Once you know what you’re aiming for, you can look at your data. Do you have the right kinds of images? Are they organized and labeled in a way a machine can make sense of? Think of it this way: high-quality, relevant data is the fuel for any AI engine. Without it, you’re not going anywhere.

Choosing Your Implementation Path

With a clear goal and your data in hand, you’ve got a few ways you can go. There are really three main paths, each with its own balance of cost, speed, and control.

- Build from Scratch: This gives you total control and a perfectly tailored solution, but it’s a heavy lift. You’ll need a specialized team, serious computing power, and a lot of time. This is the path for massive companies with very unique, mission-critical needs.

- Use Simple APIs: For straightforward tasks like basic object detection, off-the-shelf APIs are fantastic. They’re fast and easy to plug in. The downside? They’re a one-size-fits-all solution and can’t be easily customized for your specific business context.

- Partner with a Platform: This is the sweet spot for most businesses. A good platform gives you the power of a custom-built system without the headaches. It’s far more flexible than a simple API but doesn’t require you to build everything from the ground up.

A platform approach bridges the gap perfectly. It delivers powerful, pre-built tools that you can fine-tune to solve your specific challenges, helping you get results much faster without sacrificing quality.

Starting a Pilot Project with Zemith

The smartest way to begin is with a small, focused pilot project. It's the perfect way to test your idea, measure the results, and prove the value without betting the farm.

This is where a platform like Zemith really shines. It pulls everything you need into one place—tools to manage your data, access to powerful pre-trained models, and a way to analyze the output. It makes taking that first step into artificial intelligence image analysis feel manageable and, honestly, pretty exciting.

By handling the heavy technical lifting, Zemith lets you focus on what really matters: the business outcome. You can experiment with different models and tweak your approach without needing a whole data science department on day one. For a deeper look at the kinds of tools that can help shape your initial research, our guide on AI tools for research is a great place to start.

Got Questions About AI Image Analysis? Let's Clear Things Up.

Diving into the world of AI image analysis can bring up a lot of questions. That’s perfectly normal. Getting straight answers to these common concerns is the best way to cut through the noise and see how this technology can actually work for you. Let's tackle a few of the questions we hear most often.

A big one right off the bat is the difference between computer vision and AI image analysis. It's easier than it sounds. Think of computer vision as the entire scientific field—the broad discipline of teaching computers how to see and understand the visual world. AI image analysis is a specific, practical part of that field, where we use that "sight" to pull valuable information out of images to solve real problems.

"How Much Data Do I Really Need to Get Started?"

There's a persistent myth that you need a mountain of data—we're talking millions of images—to even think about using AI. While that’s true if you're building a brand-new model from absolute scratch, today’s technology offers a much smarter way in.

We can now use powerful, pre-trained models as a starting point, which completely changes the equation. For many business-specific tasks, you can get strong accuracy by fine-tuning one of these existing models with as few as a few hundred relevant, properly labeled images. In this setting, the quality of your data often matters more than the quantity. A platform approach makes this even easier, giving you access to robust models that just need a small, focused dataset to learn your specific use case.

The game has changed. We've moved from needing massive, general-purpose datasets to succeeding with smaller, high-quality, task-specific ones. This shift makes AI image analysis far more accessible, letting businesses get pilot projects off the ground and prove the value fast.

"What's This Actually Going to Cost Me?"

The price tag for AI image analysis can swing wildly depending on the path you take.

Building an in-house solution from the ground up is easily the most expensive option. You’re looking at major investments in hiring specialized AI talent, setting up powerful computing infrastructure, and enduring long, costly development cycles. It's a path that can quickly run into the hundreds of thousands of dollars.

On the other end, using a simple off-the-shelf API seems cheaper initially but offers very little customization and can get surprisingly expensive as you scale up your usage. For most companies, a platform-based subscription model hits the sweet spot. It gets rid of the huge upfront costs in favor of a predictable monthly or annual fee. This bundles everything you need—the infrastructure, the pre-trained models, and the expert support—into one package, dramatically shortening your time to see a real return on your investment.

Ready to see how Zemith can answer all your questions and simplify your workflow? Our all-in-one AI platform integrates powerful image analysis tools with everything else you need for research, creativity, and productivity. Stop juggling multiple subscriptions and discover a smarter way to work by visiting the Zemith website.