How to Conduct Usability Testing for UX That Actually Works

Learn how to conduct usability testing from start to finish. Our guide provides practical, actionable steps for planning, running, and analyzing user tests.

Ever launched a feature you were sure was a work of genius, only to watch users click around in circles like they're lost in a digital corn maze? We’ve all been there. That sinking feeling is a classic sign that a crucial step was missed somewhere along the way.

The secret sauce that separates beloved products from those that just gather digital dust is, more often than not, usability testing.

Why Usability Testing is Your Product’s Secret Weapon

Before we get into the nuts and bolts of how to do it, let's unpack why this practice is so essential. Think of it as an insurance policy against bad UX. It’s the only real bridge between what you think your users want and what they actually need to get things done.

Usability testing is all about watching real people try to use your product to accomplish specific goals. The process is pretty straightforward: you define what you want to learn, find people who represent your target audience, give them realistic tasks, and then watch what happens. The magic is in analyzing their feedback to find those golden nuggets of insight that lead to real improvements.

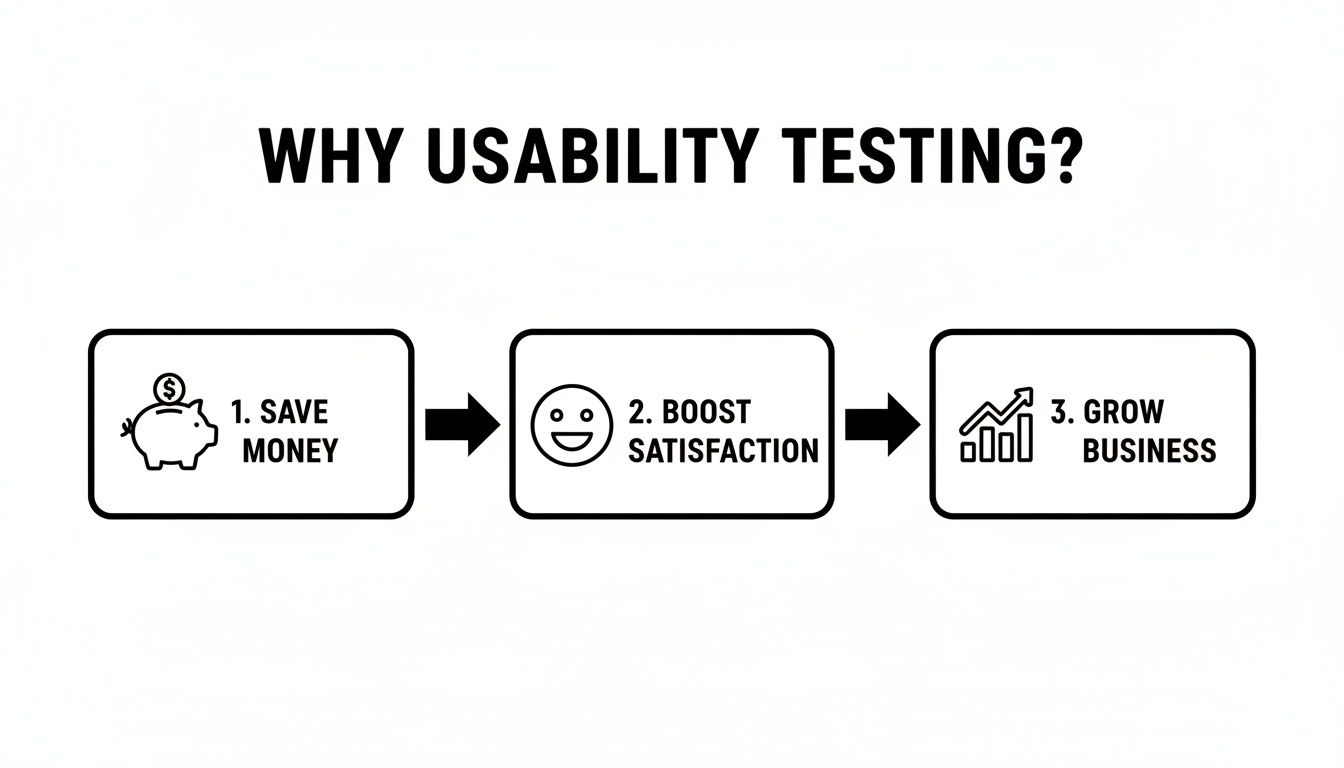

From a "Nice-to-Have" to a Must-Have

In the past, usability testing might have been seen as a luxury. Not anymore. Today, it’s a core practice for any product that wants to compete. Watching real people interact with your work is the most direct way to save your team from expensive redesigns down the road, make your users happier, and ultimately, grow your business.

For a platform like Zemith, which has to balance powerful tools like an AI Coding Assistant and a Smart Notepad, making sure the user experience is seamless isn't just a goal—it’s everything. Actionable insights from testing are what turn a cool feature into an indispensable tool.

The data backs this up. A whopping 67% of organizations now run usability tests as a standard part of their process, and adoption jumped by 25-30% after 2020 as teams got comfortable with remote testing. You can see more in this breakdown of software testing statistics.

The Real-World Payoff

Flying blind is never a good strategy, but that's exactly what you're doing when you ignore user feedback. You might be moving forward, but you have no idea if you're about to fly straight into a mountain. And let's be honest, no one looks good picking product features out of their teeth.

Here’s a look at what you stand to gain by opening your eyes:

- You save a ton of time and money. Finding a design flaw in a simple prototype is exponentially cheaper than fixing it after it’s already been coded, tested, and shipped.

- Your users will be happier. It's a simple formula: when a product is easy and intuitive, people actually enjoy using it. Happy users stick around and become your biggest advocates.

- You'll see real business growth. Happier users lead directly to better reviews, higher retention, and more revenue. To see how this plays out, check out this case study on driving growth through strategic UX optimization.

Here's a quick summary of why you should never skip usability testing.

The 'Why' Behind Usability Testing at a Glance

| Benefit | Impact on Your Product | Real-World Example |
|---|---|---|
| Reduces Risk | Prevents launching features that miss the mark or confuse users. | A fintech app tests a new "Send Money" flow and discovers users can't find the final confirmation button, preventing a costly post-launch fix. |
| Saves Resources | Identifies and fixes issues early, when they're cheap and easy to solve. | A team spends two hours tweaking a Figma prototype based on feedback, saving 40+ hours of engineering and QA time. |
| Increases Adoption | A smooth, intuitive onboarding experience encourages new users to stick around. | A project management tool sees a 20% increase in user retention after simplifying its initial project setup process based on test findings. |
| Improves Satisfaction | Creates a product that feels helpful and easy, not frustrating. | An e-commerce site learns that customers abandon carts because the shipping cost is shown too late. They move it earlier and see a drop in cart abandonment. |

Ultimately, it all comes down to grounding your product decisions in what people actually do, not just what they say or what you assume they'll do. That shift moves you away from guesswork and toward a process of continuous, validated improvement, which is a foundational element of building products people truly love.

This mindset is the cornerstone of building a product that lasts. To learn more about this approach, read our guide on how to use evidence-based decision making.

Building Your Usability Testing Game Plan

Jumping into testing without a plan is like trying to bake a cake by just throwing ingredients in a bowl. It’s messy, unpredictable, and you’ll probably end up with something nobody wants. This section is your recipe for success, helping you build a solid framework so your tests are targeted, efficient, and deliver insights you can act on immediately.

First up: you need a clear goal. What mystery are you actually trying to solve? Vague goals like "see if the website is easy to use" will get you vague, useless feedback. You have to get specific.

For example, if you're working on a platform like Zemith, a good goal might be: "Can a new user successfully create a project, upload a PDF to the Library, and use the Document Assistant to summarize it in under three minutes?" That’s a sharp, measurable question you can actually answer.
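A goal phrased this way maps directly onto numbers you can compute after the sessions. As a minimal sketch, assume you logged, for each participant, whether they completed all three steps, how long it took, and how many errors they made. The `Session` record, the `score_goal` helper, and the sample data are hypothetical; the 180-second limit simply encodes "under three minutes."

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One participant's attempt at the task (hypothetical log format)."""
    participant: str
    completed: bool  # created the project, uploaded the PDF, got a summary
    seconds: float   # time on task, start to finish
    errors: int      # wrong clicks, dead ends, backtracks


def score_goal(sessions: list[Session], limit_s: float = 180.0) -> dict[str, float]:
    """Compute simple task metrics for a goal that has a time limit."""
    n = len(sessions)
    completed = [s for s in sessions if s.completed]
    within_limit = [s for s in completed if s.seconds <= limit_s]
    # Time on task is often reported for successful attempts only.
    avg_time = sum(s.seconds for s in completed) / len(completed) if completed else 0.0
    return {
        "success_rate": len(completed) / n,
        "success_within_limit": len(within_limit) / n,
        "avg_time_successful_s": avg_time,
        "errors_per_session": sum(s.errors for s in sessions) / n,
    }


sessions = [
    Session("P1", True, 150, 1),
    Session("P2", True, 210, 3),
    Session("P3", False, 300, 5),
    Session("P4", True, 120, 0),
    Session("P5", True, 170, 2),
]
print(score_goal(sessions))
```

Reporting success with and without the time limit shows whether people fail the task outright or just take too long.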

Defining Your Goals and Metrics

Before you even think about recruiting participants, you have to define what success looks like. This means setting clear objectives and picking the right metrics to measure them. Without this, you’re just collecting opinions, not data.

Think about what you truly need to learn. Are you testing a brand-new feature or refining an existing one? Your focus will shift depending on the answer.

- For a new feature: You'll likely want to focus on discoverability and initial comprehension. Can users even find the new "AI Live Mode"? Do they understand what it does without a tutorial?

- For an existing feature: You might be more interested in efficiency and error rate. How many clicks does it take for a user to find a past conversation in their Smart Notepad? Where are they getting stuck?

A great test plan doesn't just ask, "Do they like it?" It asks, "Can they use it effectively to solve their problem?" The answer to that second question is where the real value lies.

Metrics are your scoreboard. They can be qualitative (the "why") or quantitative (the "how much"). Common metrics include task success rate, time on task, error rate, and user satisfaction scores. Understanding industry benchmarks can also provide crucial context for your results.

For example, it's helpful to know where you stand. The average System Usability Scale (SUS) score, a global benchmark for product intuitiveness, is 68. A newer metric, the Accessibility Usability Scale (AUS), averages 65, but it also reveals a stark reality: 1 in every 2 screen reader users scores 56 or below, highlighting a critical need for more inclusive design.
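If you run the SUS questionnaire yourself, scoring follows a fixed rule: each of the ten statements is rated from 1 (strongly disagree) to 5 (strongly agree), odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the 0 to 40 total is multiplied by 2.5 to give a 0 to 100 score. The sketch below implements that rule; the sample responses are made up for illustration.

```python
def sus_score(answers: list[int]) -> float:
    """Score one completed System Usability Scale questionnaire (0 to 100).

    `answers` holds the ten ratings in questionnaire order,
    each from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(answers) != 10 or any(not 1 <= a <= 5 for a in answers):
        raise ValueError("SUS needs exactly ten ratings between 1 and 5")
    raw = 0
    for item, rating in enumerate(answers, start=1):
        # Odd items are positively worded, even items negatively worded.
        raw += (rating - 1) if item % 2 == 1 else (5 - rating)
    return raw * 2.5  # scale the 0-40 raw total to 0-100


def mean_sus(responses: list[list[int]]) -> float:
    """Average SUS across participants, the number to compare with the 68 benchmark."""
    scores = [sus_score(r) for r in responses]
    return sum(scores) / len(scores)


participant = [4, 2, 4, 2, 5, 1, 4, 2, 4, 3]
print(sus_score(participant))
```

Keep in mind that a SUS score is not a percentage: 68 means "about average," not "68% usable."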

Choosing Your Testing Method

Once you've nailed down your goals, it's time to pick your method. Don't worry, you don't need a fancy lab with two-way mirrors (unless you want to feel like a secret agent). The best approach really depends on your goals, budget, and timeline.

The core decision usually comes down to two main questions: moderated or unmoderated? Remote or in-person?

- Moderated vs. Unmoderated: A moderated test is like having a co-pilot. A facilitator guides the participant, asks follow-up questions, and can probe deeper into their thought process in real time. An unmoderated test is more like a self-guided tour; the user completes tasks on their own, often using software from platforms like UserTesting or Maze that records their screen and voice.

- Remote vs. In-Person: A remote test happens wherever the user is, using their own device. This is great for getting natural feedback from a diverse group across different locations. An in-person test brings the user to you, giving you more control over the environment and the ability to observe body language.

To help you decide, here's a quick breakdown of the most common methods.

Choosing Your Usability Testing Method

A comparison of the most common usability testing methods to help you decide which is right for your project goals and resources.

| Method Type | Best For... | Pros | Cons |
|---|---|---|---|
| Moderated In-Person | Deep qualitative insights, complex prototypes, and observing non-verbal cues. | Rich, detailed feedback; ability to ask follow-up questions; high control over the test environment. | Expensive and time-consuming; geographically limited; smaller sample sizes. |
| Moderated Remote | Getting deep insights from a geographically diverse audience. | Cheaper than in-person; access to a wider pool of participants; still allows for direct interaction. | Tech issues can be a problem; misses some non-verbal cues; requires scheduling across time zones. |
| Unmoderated Remote | Quick, quantitative data on specific tasks and testing with large sample sizes. | Fast and cost-effective; large sample sizes are possible; participants are in their natural environment. | No ability to ask "why"; risk of poor-quality feedback; limited to simple, clear tasks. |

Ultimately, there's no single "best" method. The right choice is the one that best serves your research questions while fitting within your real-world constraints of time and money.

Whichever method you choose, these efforts tie directly into core business outcomes, connecting a better user experience with tangible results like lower development costs and higher customer satisfaction.

Your test plan is the bridge between a vague idea and a structured, insightful study. This document gets everyone on the same page and is a critical part of any successful design process. To learn more about how this fits into the bigger picture, check out our guide on the design thinking process steps. A solid plan is your best defense against wasting time and resources on tests that don't deliver.

Finding the Right People for Your Test

So, you've got a brilliant test plan. Your goals are sharp, your methods are chosen, and you're ready to uncover some game-changing insights. But hold on a second. All that careful planning is completely useless if you put it in front of the wrong people.

Testing your super-niche developer tool with your Aunt Carol, who still thinks "the cloud" is in the sky? You'll get feedback, sure, but it won't be the feedback you need. Finding participants who actually represent your target audience is probably the most critical part of getting valuable results.

Ditching the Myth of a Million Testers

Let's bust a common myth right now: you do not need an army of testers. In fact, research from the Nielsen Norman Group has famously shown that you can uncover about 85% of usability problems with just five users.

That’s right, just five. After that, you start seeing the same issues pop up over and over again.

This "magic number 5" is a lifesaver for teams with tight timelines and budgets. The goal isn't to find every single tiny flaw in one go. It's to identify the biggest roadblocks, fix them, and then test again if needed. This iterative approach is far more effective than one massive, expensive study.
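The "magic number 5" comes from a simple model by Jakob Nielsen and Tom Landauer: the share of problems found by n users is 1 - (1 - L)^n, where L is the proportion of problems a single user runs into. Nielsen reports an L of about 31% as a typical value; treat it as an assumption, since your product and audience may differ. A quick sketch of the curve:

```python
def share_found(n_users: int, per_user_rate: float = 0.31) -> float:
    """Expected share of usability problems uncovered by n_users participants.

    Nielsen-Landauer model: 1 - (1 - L)^n, where L (per_user_rate) is the
    probability that a single user hits any given problem.
    """
    return 1 - (1 - per_user_rate) ** n_users


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users -> {share_found(n):.0%} of problems found")
```

With L = 0.31, five users come out at roughly 84%, the basis for the often-quoted "about 85%," and doubling to ten users adds only about 13 more percentage points. The model assumes participants come from one fairly homogeneous user group; if you serve distinct audiences, plan a small round for each.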

Crafting the Perfect Screener Survey

Before you can get that killer feedback, you have to filter out the wrong people. This is where a screener survey comes in. Think of it as a bouncer for your usability test, making sure only the right folks get past the velvet rope.

Your screener should be short and designed to identify key characteristics without giving away what you're really looking for. When defining who you need, understanding powerful audience segmentation strategies can help you zero in on the most relevant groups.

Let’s imagine we’re testing a new Whiteboard feature in Zemith, built for creative collaboration. A good screener might ask:

- How often do you collaborate with others on creative projects? (Behavioral)

- Which of these tools have you used for brainstorming in the last month? (Tool usage)

- What's your current job title? (Demographic/Professional)

Notice we aren't asking, "Do you want to test a new whiteboard tool?" That just encourages people to tell you what they think you want to hear. Instead, you're looking for real-world behaviors and traits that match your ideal user persona.
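If your screener lives in a form tool that exports responses, you can write the qualifying logic down explicitly so every respondent is judged the same way. A minimal sketch, assuming hypothetical field names and a made-up target profile for the Whiteboard study (people who collaborate at least weekly and have recently used a whiteboard tool). Job title is recorded for balancing the mix of roles rather than used as a hard filter.

```python
from dataclasses import dataclass, field


@dataclass
class ScreenerResponse:
    """One respondent's answers (hypothetical field names)."""
    name: str
    collab_frequency: str  # e.g. "daily", "weekly", "monthly", "rarely"
    brainstorm_tools: set[str] = field(default_factory=set)
    job_title: str = ""


# Hypothetical target profile for a whiteboard study.
QUALIFYING_FREQUENCIES = {"daily", "weekly"}
RELEVANT_TOOLS = {"Miro", "FigJam", "Mural"}


def qualifies(r: ScreenerResponse) -> bool:
    """Keep respondents whose recent behavior matches the persona."""
    collaborates_often = r.collab_frequency in QUALIFYING_FREQUENCIES
    uses_whiteboards = bool(r.brainstorm_tools & RELEVANT_TOOLS)
    return collaborates_often and uses_whiteboards


respondents = [
    ScreenerResponse("A", "weekly", {"Miro", "Google Docs"}, "Product Manager"),
    ScreenerResponse("B", "rarely", {"Miro"}, "Accountant"),
    ScreenerResponse("C", "daily", {"Notion"}, "Copywriter"),
]
shortlist = [r.name for r in respondents if qualifies(r)]
print(shortlist)
```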

Where to Find Your Ideal Participants

Once your screener is ready, it's time to go fishing. Finding the right participants can seem daunting, but there are plenty of ponds to choose from.

- Your Existing User Base: Your own customers are a goldmine. Send an email to a segment of your users who fit the profile.

- Social & Community Platforms: Places like LinkedIn, relevant subreddits, or specialized Slack communities are great for finding professionals.

- Recruitment Services: If you have a budget, services like User Interviews or Respondent.io can find highly specific participants for you, handling all the logistics and incentives.

Pro-Tip: Always recruit a few extra participants. Life happens. People get sick, forget appointments, or get abducted by aliens (probably). Having a backup or two ensures your schedule stays on track and you don't lose a valuable session.
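To decide how many extras to book, a rough rule is to divide the number of sessions you need by your expected show-up rate and round up. The no-show rate below is an assumption; track your own across studies and plug that in.

```python
import math


def participants_to_recruit(target_sessions: int, no_show_rate: float) -> int:
    """People to book so that, on average, enough of them actually show up."""
    if not 0 <= no_show_rate < 1:
        raise ValueError("no_show_rate must be in [0, 1)")
    return math.ceil(target_sessions / (1 - no_show_rate))


# Assumed 20% no-show rate, purely for illustration.
print(participants_to_recruit(5, 0.2))
```

Needing five sessions with an assumed 20% no-show rate means booking seven people.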

The Not-So-Scary Paperwork

Once you’ve found your people, there are two final but crucial steps: consent and compensation.

A consent form is absolutely non-negotiable. It protects both you and the participant by clearly outlining the test's purpose, how their data will be used, and confirming they can stop at any time. Keep it simple and use plain language—not legalese.

Incentives show you value their time. This doesn't have to be cash. Gift cards, a free month of your service, or even a donation to a charity of their choice are all great options. Fair compensation is key to getting thoughtful, engaged participants.

This entire process of finding and preparing participants is a huge part of the overall research effort, and we've barely scratched the surface here. For a deeper look, see our guide on how to conduct user research effectively.

How to Run a Test Without Influencing Users

Alright, it's showtime. You've done the planning, found the right people, and now it's time to actually sit down and get the insights that will shape your product. This is where the magic happens, but it's also where a session can go off the rails if you're not careful.

Your role as a moderator is to be a neutral, friendly guide—not a teacher, a salesperson, or a cheerleader. The goal is to create a comfortable space where your participant feels safe enough to think out loud and be brutally honest. Some of the most valuable feedback comes from moments of confusion or frustration, so your job is to make it okay for them to struggle.

The Art of Moderation

Being a great moderator is less about what you say and more about how you create space for the participant to think and act naturally. Your best tools are open-ended questions and a genuine comfort with silence. It's a tricky balance; you have to guide the session without leading the witness.

Always remember: the participant isn't the one being tested. The product is. Kicking off the session by telling participants this explicitly can completely change the dynamic and put people at ease.

A few key skills will make a world of difference:

- Ask open-ended questions. Instead of a leading question like, "Was that easy?" try something more open: "How did that compare to what you expected?"

- Use neutral phrasing. Ditch words with built-in judgment, like "good," "correct," or "confusing." Let them supply the adjectives.

- Probe for the "why." When a user does something you didn't expect, your go-to follow-up should be a simple, "Can you tell me what you're thinking there?"

Mastering the Awkward Silence

This is probably the hardest skill to learn, but it's easily the most powerful tool you have. When a participant pauses, every instinct you have will scream, "Jump in! Help them! Fill the silence!"

Don't do it. Just count to five in your head.

That "awkward" pause is often where the real insights are born. It’s the space where users move past their initial reactions and start to verbalize the real reason they're stuck or what truly delights them.

Let them struggle a little. The point isn't for them to complete the task successfully; it's for you to understand all the reasons they might fail. Watching someone hunt for a feature in Zemith's Document Assistant for 30 seconds is a hundred times more valuable than you pointing it out to them in five.

Your Session Script: From Welcome to Wrap-Up

While you absolutely want the conversation to feel natural and unscripted, you should never, ever go into a session without a script. A script is your safety net. It makes sure you cover every key point, treat all participants consistently, and don't forget crucial things like asking for permission to record.

Here’s a simple structure you can borrow and adapt:

- The Warm Welcome: Start by introducing yourself and thanking them for their time. Immediately reassure them there are no right or wrong answers—you're just interested in their perspective.

- Setting the Scene: Give them just enough context. For example, "Imagine you're a content creator logging into Zemith to start a new blog post..."

- The Tasks: Introduce the tasks one at a time. This is where you remind them to think aloud as much as possible.

- Probing Questions: Have a few of these in your back pocket to dig deeper. Think: "What made you click there?" or "What are you looking for on this screen?"

- The Wrap-Up: Thank them again profusely, ask for any final thoughts, and clearly explain the next steps regarding their feedback and their incentive.

Recording and Note-Taking

Look, you simply can't moderate effectively and take detailed notes at the same time. It's impossible. Your focus needs to be 100% on the participant—their words, their hesitations, their body language.

That's why recording your sessions (with consent!) is a must. It's your backup. But a recording alone isn't enough. The ideal setup involves a dedicated note-taker. This person's job is to be a fly on the wall, capturing key quotes, observing non-verbal cues, and time-stamping critical events. They’re there to document the "why" behind every click. For some great tips on how to structure this, check out our guide on how to take meeting notes effectively.

Your notes should capture observations, not your interpretations. Instead of writing, "The user was confused," write, "User said, 'I'm not sure what this button does' and hesitated for 15 seconds before clicking." That small shift in detail is what turns a messy pile of notes into solid, actionable data.
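One way to enforce "observations, not interpretations" is to give the note-taker a fixed record format with a timestamp and a literal description. The fields below are a hypothetical template, not a standard; exporting to CSV simply makes the notes easy to sort and filter during analysis.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class Observation:
    """One note-taker entry: what happened, not what it meant."""
    participant: str
    timestamp: str  # position in the recording, "mm:ss"
    task: str
    kind: str       # e.g. "quote", "action", "hesitation", "error"
    text: str       # verbatim quote or literal description of behavior


notes = [
    Observation("P2", "04:12", "Save draft", "quote",
                "I'm not sure what this button does"),
    Observation("P2", "04:12", "Save draft", "hesitation",
                "Paused 15 seconds before clicking the icon"),
]

# Write the log as CSV so it can be sorted and filtered later.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Observation)])
writer.writeheader()
for obs in notes:
    writer.writerow(asdict(obs))
print(buffer.getvalue())
```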

Turning User Feedback into Actionable Insights

Alright, the tests are done. You've survived the awkward silences, taken a mountain of notes, and now you’re staring at what looks like a chaotic explosion of observations, quotes, and video clips. It can feel like you've just dumped a 1,000-piece jigsaw puzzle onto the table without the box top. So, now what?

This is where the real work begins. Raw data is just noise; your job is to find the music in it. It’s all about turning that messy feedback into a clear story with actionable next steps that your team can actually get excited about.

Don't sweat it. You don't need a Ph.D. in data science to make sense of your findings. A few simple, powerful synthesis methods are all you need.

From Chaos to Clarity with Affinity Mapping

One of the best ways to get started is with affinity mapping—which is really just a fancy term for grouping sticky notes. It’s a beautifully simple, visual way to spot recurring patterns and themes in all that qualitative data you just collected.

Here’s the basic flow:

- Get everything out: Go through your notes and recordings. Write down every single interesting observation, quote, or pain point on its own sticky note. Seriously, everything.

- Group 'em up: Stick all the notes on a wall or a digital whiteboard like Miro. Without overthinking it, just start moving notes that feel related next to each other. A user who said, "I couldn't find the save button," belongs next to another who frantically clicked everywhere but the save icon.

- Give your groups a name: Once you have a few solid clusters, give each one a name. These names will become your core themes, like "Confusing Navigation" or "Unclear Button Labels."

This whole process forces you to climb out of the weeds and see the bigger picture. It’s a foundational skill for anyone working with user feedback. For a deeper dive, our guide on how to analyze qualitative data is a great place to go next.
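The clustering itself is a human judgment call, but once each note carries a theme name, a short script can tally the results. This sketch uses made-up notes and ranks themes by how many different participants ran into them, since one talkative participant can generate many notes about the same issue.

```python
from collections import defaultdict

# Each sticky note: (participant, observation, theme assigned during clustering).
notes = [
    ("P1", "Couldn't find the save button", "Unclear Button Labels"),
    ("P3", "Clicked everywhere except the save icon", "Unclear Button Labels"),
    ("P1", "Asked what 'Sync' means", "Unclear Button Labels"),
    ("P5", "Hovered over the save icon without clicking", "Unclear Button Labels"),
    ("P2", "Returned to the home screen three times", "Confusing Navigation"),
    ("P4", "Expected settings under the profile menu", "Confusing Navigation"),
]

clusters: dict[str, list[tuple[str, str]]] = defaultdict(list)
for participant, text, theme in notes:
    clusters[theme].append((participant, text))


def participants(items: list[tuple[str, str]]) -> set[str]:
    """Distinct participants behind a cluster of notes."""
    return {p for p, _ in items}


# Rank themes by distinct participants, not raw note count.
ranked = sorted(clusters.items(), key=lambda kv: len(participants(kv[1])), reverse=True)
for theme, items in ranked:
    people = sorted(participants(items))
    print(f"{theme}: {len(items)} notes from {len(people)} participants {people}")
```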

Blending the 'What' with the 'Why'

The most powerful insights come from combining your qualitative feedback with quantitative metrics. The numbers tell you what happened, but the user quotes and observations tell you why it happened. This one-two punch is your secret weapon for building a rock-solid case for change.

Let’s say your data shows that 75% of users failed to add a new document to a project. That’s an alarming stat, but on its own, it's just a number.

Now, you layer in the qualitative gold from your notes:

- One user said, "I thought I had to create a project first, but I couldn't figure out how."

- Another noted, "The 'New Document' button was grayed out. I don't know why."

- You watched a third person just keep dragging the file onto the screen, hoping something would happen.

The Insight: The numbers flag the fire, but the user quotes tell you exactly where the smoke is coming from. Combining "Task failure rate was 75%" with direct user struggles creates a story that’s impossible for your team to ignore.
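You can build the same pairing straight from your session log: compute the failure rate, then list the evidence behind each failure underneath it. A minimal sketch using the example above; the `TaskResult` record is hypothetical, and the fourth, successful participant is added only so the numbers work out to 75%.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    participant: str
    succeeded: bool
    evidence: str = ""  # most telling quote or observed behavior


def summarize(task: str, results: list[TaskResult]) -> str:
    """Headline failure rate followed by the evidence behind each failure."""
    failures = [r for r in results if not r.succeeded]
    rate = len(failures) / len(results)
    lines = [f"{task}: {rate:.0%} failure rate ({len(failures)}/{len(results)})"]
    lines += [f"  {r.participant}: {r.evidence}" for r in failures if r.evidence]
    return "\n".join(lines)


results = [
    TaskResult("P1", False, "Thought a project had to be created first, couldn't find how"),
    TaskResult("P2", False, "Saw the 'New Document' button grayed out, didn't know why"),
    TaskResult("P3", False, "Kept dragging the file onto the screen, waiting for something to happen"),
    TaskResult("P4", True),
]
print(summarize("Add a document to a project", results))
```

Showing the raw count next to the percentage keeps small samples honest: 75% of four people is three people.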

This kind of analysis is in high demand. Usability testing holds the largest share (25.1%) of the crowdsourced testing market and is expected to grow at an 11.6% compound annual growth rate (CAGR) through 2030, fueled by companies realizing that this blend of data is key to building better products. You can read more about this trend in this overview of the usability testing service market.

Prioritizing and Reporting Your Findings

You’ve found the patterns and pieced together the story. The final step is to frame your findings as a prioritized list of recommendations your team can actually do something about. A 50-page report that no one reads is a waste of everyone's time. A one-page summary with clear, prioritized actions? That's gold.

A simple way to prioritize issues is to map them out based on two things: Frequency (how many people ran into this?) and Impact (how badly did it block them?).

| Priority Level | Frequency | Impact | Example |
|---|---|---|---|
| P1 - Critical | High | High | All 5 users couldn't complete the checkout process. |
| P2 - Serious | Low | High | One user found a bug that crashed the app. |
| P3 - Minor | High | Low | Most users commented that a button color was "ugly." |
| P4 - Trivial | Low | Low | One user misspelled a word in a text field. |

Focus your energy on the P1 and P2 issues. These are the showstoppers, the things that are actively costing you users and revenue. The "ugly button" can wait.
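To apply the grid consistently across a long list of issues, write the two cut-offs down. In this sketch, "high frequency" means at least half of participants hit the issue and "high impact" means it blocked task completion; both thresholds are assumptions to adjust for your study, and the issues mirror the table's examples.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    users_affected: int
    blocks_task: bool  # assumption: "high impact" = prevented task completion


def priority(issue: Issue, total_users: int, high_share: float = 0.5) -> str:
    """Place an issue on the frequency x impact grid."""
    frequent = issue.users_affected / total_users >= high_share
    if issue.blocks_task:
        return "P1 - Critical" if frequent else "P2 - Serious"
    return "P3 - Minor" if frequent else "P4 - Trivial"


issues = [
    Issue("Button color called 'ugly'", 4, False),
    Issue("Checkout could not be completed", 5, True),
    Issue("Misspelled word in a text field", 1, False),
    Issue("App crashed", 1, True),
]

# "P1" < "P2" < ... so sorting on the label puts critical issues first.
for issue in sorted(issues, key=lambda i: priority(i, total_users=5)):
    print(f"{priority(issue, total_users=5)}: {issue.title}")
```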

When you present your findings, lead with the human element. Kick things off with a powerful video clip of a user struggling or a quote that perfectly captures their frustration. This makes the data feel real and urgent. Then, walk them through your prioritized recommendations and open the floor for a conversation about solutions. Your hard work has just laid the foundation for real improvements that users will actually notice and appreciate.

A Few Lingering Usability Testing Questions

Even with a solid plan, a few questions always seem to hang in the air. That’s perfectly normal. I've heard these pop up time and time again from teams just starting out with usability testing.

Let's clear the air and tackle some of the most common head-scratchers so you can move forward with confidence.

"How Many Users Do I Actually Need to Test With?"

This is the big one, and the answer almost always surprises people: five.

No, that's not a number I just pulled out of a hat. It comes from foundational research by the Nielsen Norman Group. They discovered that with just five users, you're likely to uncover about 85% of the core usability problems in your design.

Think about it this way: after the fifth person, you'll find yourself watching users trip over the same issues repeatedly. You've hit the point of diminishing returns.

The real pro move is to run small, iterative tests. Grab five users, find the biggest pain points, and fix them. Then, rinse and repeat with another five people on the new-and-improved version. It’s a much smarter (and cheaper) way to work than pinning all your hopes on one massive, expensive test.

"What’s the Real Difference Between Usability Testing and UAT?"

This is a fantastic question because on the surface, they sound like they could be cousins. But in reality, their goals are worlds apart. It’s like the difference between a food critic and a health inspector.

Usability Testing is all about the experience. Can someone actually use this thing without getting frustrated? Is it intuitive? This kind of testing should happen all through the design process to make sure you’re building something people will enjoy using.

User Acceptance Testing (UAT) comes at the very end of the line. Its job is to answer one simple question: "Did we build what the business or the contract said we would build?" It's the final sign-off, a last-minute check to ensure all the technical and business requirements have been met before launch.

So, one is asking, "Is it easy to use?" The other is asking, "Does it do what we promised it would do?"

"Can I Pull This Off on a Shoestring Budget?"

You absolutely can. Don't let anyone tell you that you need a fancy lab with two-way mirrors to get good feedback. A scrappy, well-planned test is a million times better than no test at all.

There are so many affordable remote testing tools that can handle screen and audio recording for you. You can find willing participants in your own network, on social media groups, or in online communities related to your product.

And you don't always need to offer cash. Gift cards, company swag, or even a free subscription to your product can work wonders as an incentive. Seriously, a tight budget is not an excuse to skip talking to your users.

"What Are the Most Common Mistakes I Should Avoid?"

Most of the big slip-ups happen right in the middle of the testing session. They're easy mistakes to make when you're just starting out, but they're also easy to dodge once you know what they are.

Here are the top three I see all the time:

- Asking Leading Questions: It's so tempting to nudge a user by asking something like, "That was pretty straightforward, right?" You’re basically telling them the answer you want to hear. Instead, keep it neutral and open-ended. Ask, "How would you go about saving your work from this screen?"

- Jumping In to "Rescue" the User: Your natural instinct is to help someone the second they start to struggle. Fight that urge! The struggle is where the gold is. Watching someone get stuck tells you exactly where your design is failing them.

- Testing with the Wrong People: If you're building sophisticated software for accountants, getting feedback from college students is going to be pretty useless. Your test results are only as good as the people you recruit. Make sure they’re a true reflection of who will actually be using your product every day.

Ready to put these insights into practice and build a product people love? Zemith brings all your AI tools into one seamless workspace. From brainstorming with our AI Whiteboard to analyzing documents with our Document Assistant, we're building the future of productivity—and we need feedback from users like you. Start simplifying your workflow and see how much faster you can work. Explore all the features at https://www.zemith.com.

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

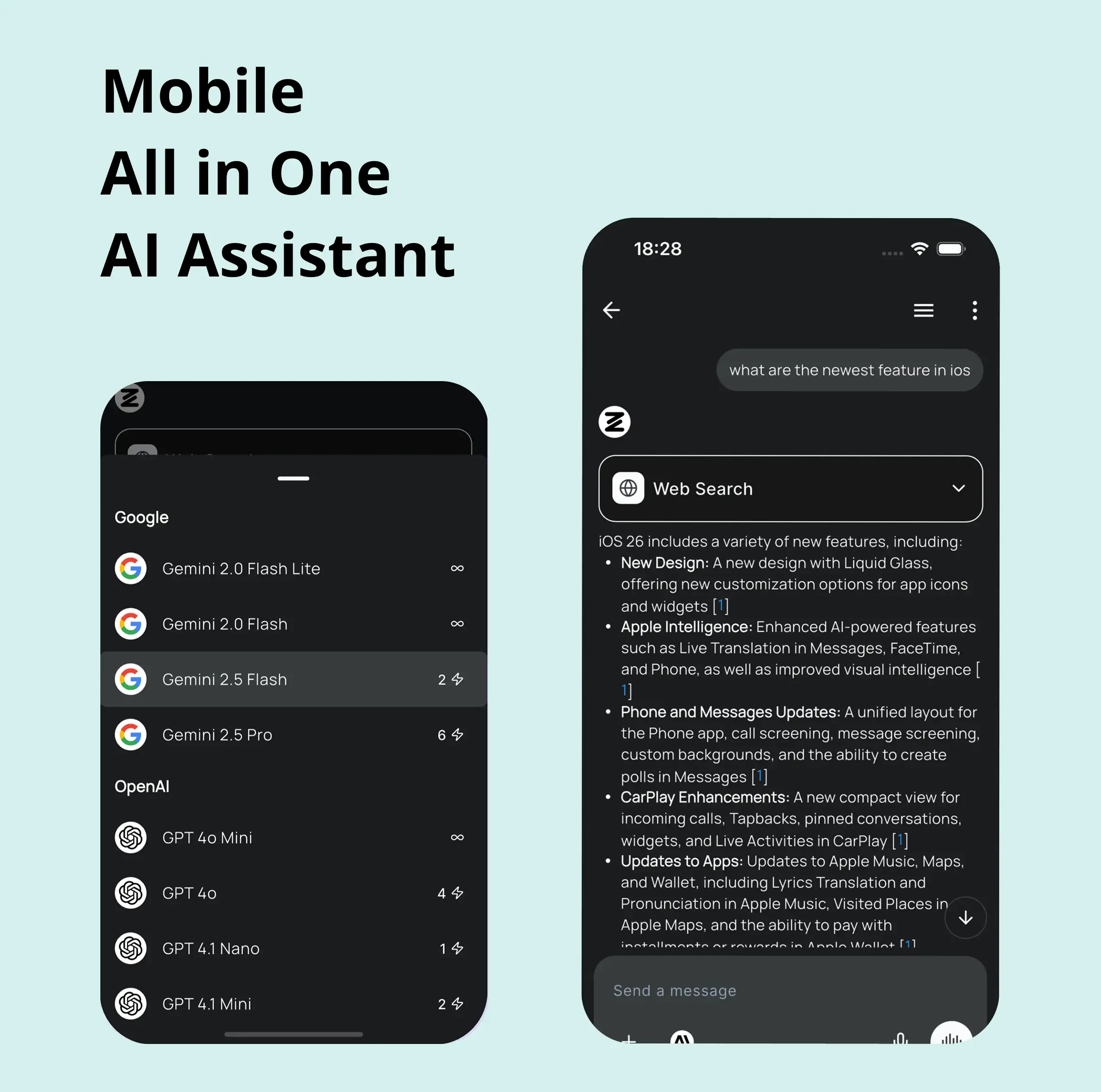

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

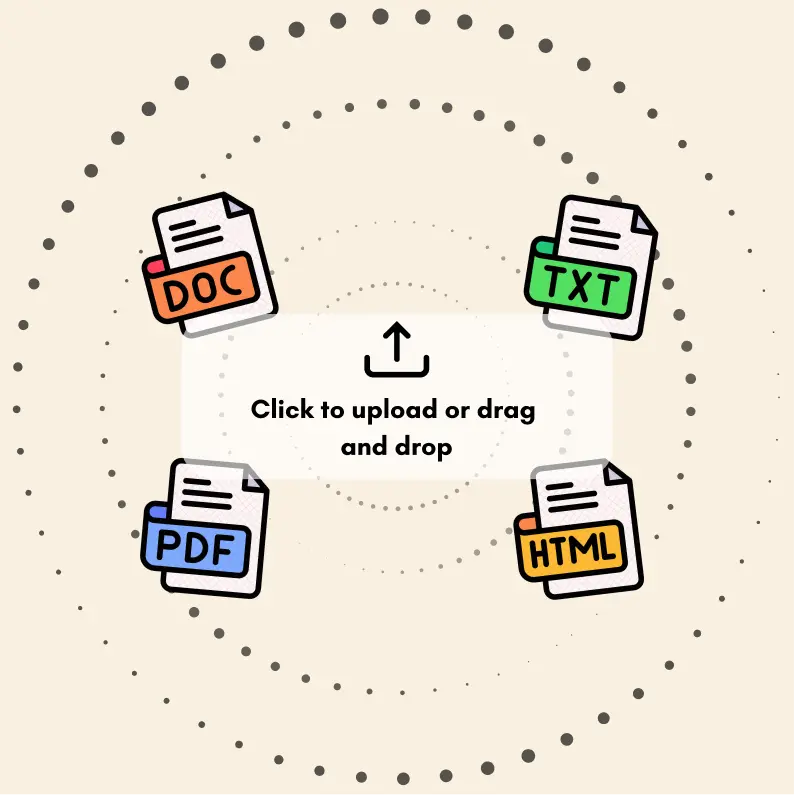

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

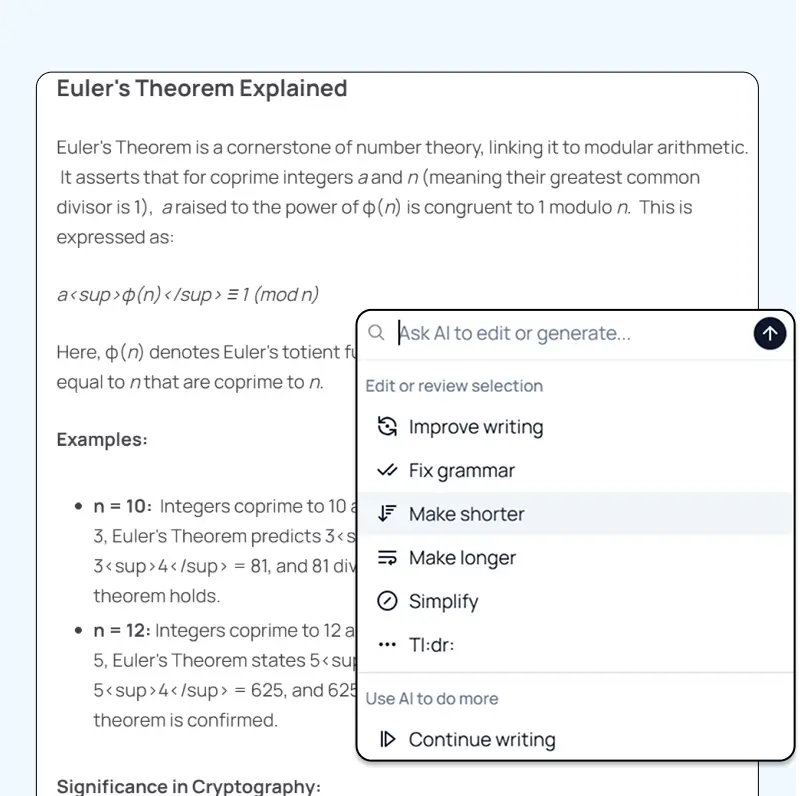

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

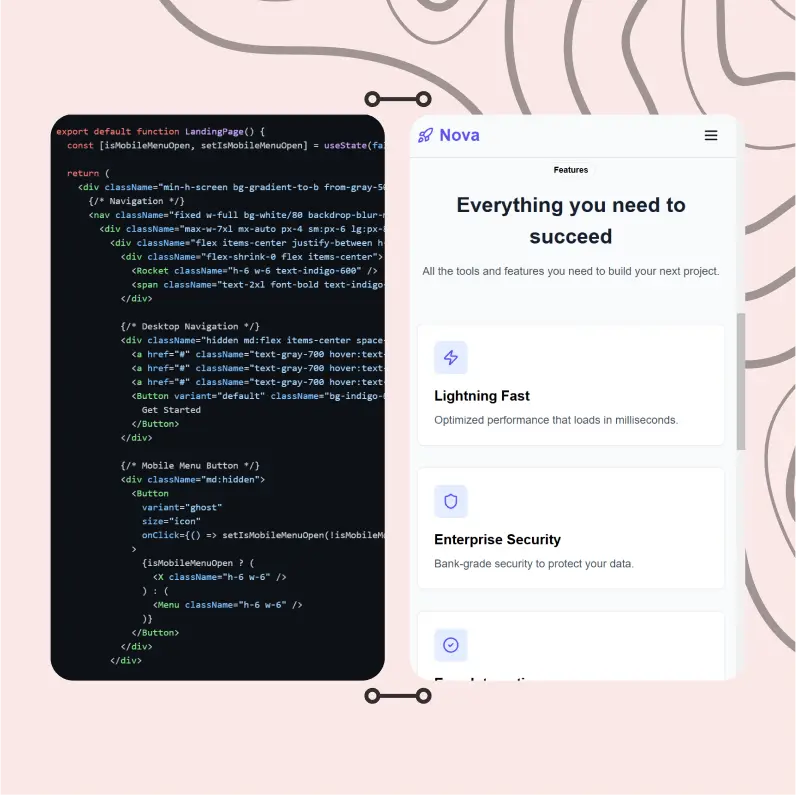

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

