AI Ethics in Behavior Analysis: Challenges & Strategies

AI is revolutionizing behavior analysis, but it comes with ethical hurdles. Here's what you need to know:

- AI in behavior analysis:
  - Analyzes large datasets quickly
  - Personalizes treatments
  - Detects early signs of conditions like autism

- Key ethical issues:
  - Privacy and data protection
  - Bias and fairness
  - Lack of transparency in AI decisions
  - Informed consent
  - Accountability for AI errors

- 8 steps for ethical AI use:
  - Identify ethical risks
  - Create an AI ethics policy
  - Protect sensitive data
  - Use diverse, unbiased data
  - Explain AI decisions clearly
  - Obtain informed consent
  - Establish oversight
  - Continuously train staff on ethics

- Best practices:
  - Regular ethics audits
  - Collaboration with ethics experts
  - Ongoing AI improvement
  - Open communication with users

| Challenge | Strategy |
|---|---|
| Privacy concerns | Strong encryption, user control |
| Bias in AI | Diverse data, regular bias checks |
| Lack of transparency | Clear explanations, human oversight |

As AI evolves, staying on top of ethical issues is crucial for responsible behavior analysis.


AI in behavior analysis: An overview

AI is shaking up behavior analysis. It's giving us new ways to understand and shape how people act. Let's dive into the tech and how it's being used right now.

AI types in behavior analysis

Behavior analysts are using several AI technologies:

- Machine learning: Finds patterns in big data sets

- Natural language processing (NLP): Breaks down text and speech

- Computer vision: Makes sense of visual data

- Predictive modeling: Forecasts future behavior from past data

Current AI uses

AI is making waves in behavior analysis:

Spotting autism early: An AI system at the University of Louisville can accurately diagnose autism in toddlers.

Gathering data: AI tools collect behavioral data automatically. This cuts down on mistakes and frees up analysts to focus on what the data means.

Personalized treatment: AI crunches the numbers to tailor treatment to each person. ABA Matrix's STO Analytics Tools, for example, use AI to help decide, based on the data, when a client has met a goal.

Preventing relapse: Some AI platforms watch for relapse signs. Discovery Behavioral Health's Discovery365 platform looks at video assessments to catch potential relapse indicators in substance use treatment.

Keeping therapy on track: AI even watches the therapists. Ieso, a mental health clinic, uses NLP to analyze language in therapy sessions to maintain quality care.

Matching patients and providers: Companies like LifeStance and Talkspace use machine learning to pair patients with the right therapists.

These AI uses show promise, but they're still mostly in testing. Dr. David J. Cox from RethinkBH says:

"As AI product creators, we should deliver data transparency. As AI product consumers, we should demand it."

This reminds us to think about ethics as AI becomes more common in behavior analysis.

Ethical issues in AI behavior analysis

AI in behavior analysis is powerful, but it comes with ethical challenges. Here are the key issues:

Privacy and data protection

AI needs lots of personal data, which raises concerns:

- Data breaches: sensitive behavioral and health information can be exposed if it isn't kept secure

- Unauthorized use: companies might use data in ways people never agreed to

A 2018 Boston Consulting Group study found 75% of consumers see data privacy as a top worry.

Bias and fairness

AI can amplify human biases:

- Amazon scrapped an AI recruiting tool that favored men

- A healthcare algorithm gave white patients priority over Black patients

These biases can hurt marginalized groups.

The "black box" problem

AI often can't explain its decisions. This lack of transparency is an issue when AI makes important choices about people's lives.

Consent and choices

People should know when AI analyzes them. But explaining complex AI simply is tough. Some might feel pressured to agree without understanding.

Accountability

When AI messes up, who's responsible? The company that made it? The users? This unclear accountability is a big challenge.

Dr. Frederic G. Reamer, an ethics expert in behavioral health, says:

"Behavioral health practitioners using AI face ethical issues with informed consent, privacy, transparency, misdiagnosis, client abandonment, surveillance, and algorithmic bias."

To use AI ethically in behavior analysis, we need to tackle these issues head-on.

How to use AI ethically

Using AI ethically in behavior analysis isn't just a nice-to-have. It's a must. Here's how to do it right:

Creating ethical guidelines

Build a solid ethical framework:

- Set clear rules for data, privacy, and fairness

- Define how to develop and test algorithms

- Create a process to tackle ethical issues

Using varied data

Mix it up to cut down on bias:

- Get data from different groups and backgrounds

- Blend real-world and synthetic data

- Check your data sources regularly for bias
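
Checking data sources for bias can start with a simple representation check: compare how often each group appears in your data with its share of the population you serve. The sketch below is a minimal illustration under stated assumptions: the group labels, reference shares, and the 0.8 cutoff are hypothetical placeholders, not an established standard.

```python
from collections import Counter

def representation_gaps(groups, reference_shares, min_ratio=0.8):
    """Flag groups whose share of the dataset is below min_ratio times
    their share of a reference population (min_ratio is an assumption)."""
    if not groups:
        raise ValueError("dataset is empty")
    counts = Counter(groups)
    total = len(groups)
    flagged = {}
    for group, expected_share in reference_shares.items():
        observed_share = counts.get(group, 0) / total
        ratio = observed_share / expected_share
        if ratio < min_ratio:
            flagged[group] = round(ratio, 2)
    return flagged

# Hypothetical data: one group label per client record.
records = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}
print(representation_gaps(records, reference))  # {'C': 0.33}
```

Here group C makes up 5% of the records but 15% of the reference population, so it is flagged for follow-up (for example, collecting more data from that group).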

Managing data properly

Keep sensitive info safe:

- Use strong encryption and limit access

- Only collect what you need

- Follow privacy laws like GDPR
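
To make "only collect what you need" concrete, here is a minimal sketch that drops fields a model doesn't use and replaces the client ID with a keyed hash (HMAC-SHA256 from Python's standard library). The field names and key are hypothetical. Pseudonymized data still counts as personal data under GDPR, so this supports encryption and access limits rather than replacing them.

```python
import hashlib
import hmac

# Hypothetical: the only fields the model actually needs.
ALLOWED_FIELDS = {"session_count", "target_behavior_rate", "age_band"}

def minimize_record(record, secret_key):
    """Drop fields the model doesn't need and swap the client ID for a keyed
    hash. This is pseudonymization, not anonymization: whoever holds the key
    can re-link records, so store the key separately and restrict access."""
    pseudo_id = hmac.new(secret_key, record["client_id"].encode("utf-8"),
                         hashlib.sha256).hexdigest()
    slim = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    slim["pseudo_id"] = pseudo_id
    return slim

key = b"load-me-from-a-secrets-manager"  # placeholder; never hard-code a real key
raw = {
    "client_id": "C-1042",
    "name": "Jane Doe",
    "home_address": "12 Example St",
    "session_count": 12,
    "target_behavior_rate": 0.4,
    "age_band": "6-8",
}
slim = minimize_record(raw, key)
print(sorted(slim))  # ['age_band', 'pseudo_id', 'session_count', 'target_behavior_rate']
```

The same client always maps to the same pseudonym under one key, so records can still be linked for analysis without exposing names or addresses to the model.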

Making AI decisions clear

Be transparent:

- Explain how AI makes decisions in simple terms

- Tell users how you're using their data

- Let users question AI decisions
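
For simple additive models, explaining a decision in plain terms can be as direct as listing which inputs pushed a score up or down. This sketch assumes a linear scoring model with hypothetical feature names and weights; more complex models need dedicated explanation methods (for example, SHAP values), which this does not implement.

```python
def explain_linear_score(weights, features, bias=0.0, top_n=3):
    """For an additive (linear) score, return the total and the features
    that moved it most, phrased in plain language."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = []
    for name, c in ranked[:top_n]:
        if c == 0:
            continue  # a zero contribution didn't move the score
        direction = "raised" if c > 0 else "lowered"
        reasons.append(f"{name.replace('_', ' ')} {direction} the score by {abs(c):.2f}")
    return score, reasons

# Hypothetical weights and one client's hypothetical feature values.
weights = {"missed_sessions": 0.5, "skill_acquisition_rate": -0.8, "weeks_in_program": 0.05}
client = {"missed_sessions": 3, "skill_acquisition_rate": 1.5, "weeks_in_program": 10}
score, reasons = explain_linear_score(weights, client)
for line in reasons:
    print(line)
# missed sessions raised the score by 1.50
# skill acquisition rate lowered the score by 1.20
# weeks in program raised the score by 0.50
```

Showing these reasons to clients and staff also gives them something concrete to question when a result looks wrong.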

Setting up responsibility systems

Know who's in charge:

- Put specific people in charge of AI ethics

- Audit your AI systems often

- Let users report concerns easily
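
Regular audits are easier when every AI recommendation is logged along with the model version and whoever reviewed it. Below is a minimal, hypothetical sketch of a tamper-evident log: each entry stores the hash of the previous one, so quietly editing a past entry breaks verification. It only catches tampering if the latest hash is also kept somewhere the editor can't reach, and the class and field names are illustrative, not from any particular product.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

class AuditLog:
    """Append-only log of AI recommendations (hypothetical design). Each entry
    stores the hash of the one before it, so editing a past entry breaks the chain."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, model_version, recommendation, reviewer=None):
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "recommendation": recommendation,
            "reviewer": reviewer,  # None = no human has signed off yet
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)  # hash computed before the key is added
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; False means some entry was altered."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def pending_review(self):
        """Recommendations no human has reviewed yet."""
        return [e for e in self.entries if e["reviewer"] is None]

log = AuditLog()
log.record("model-v1.2", "Fade prompting for target skill 3")
log.record("model-v1.2", "Mark goal 5 as mastered", reviewer="supervising BCBA")
print(log.verify(), len(log.pending_review()))  # True 1
```

The `pending_review` list gives the people in charge of AI ethics a concrete queue of recommendations that still need a human check.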

Example: Psicosmart, a psychological testing platform, runs its tests on cloud systems while following ethical guidelines. It focuses on informed consent, making sure users know how their data is used.

"Informed consent isn't just about getting a signature. It's about giving people the knowledge to make choices about their own lives." - Psicosmart Editorial Team

To put this into action:

- Train your team on AI ethics

- Work with ethics experts

- Keep up with AI laws

- Review and update your ethics practices regularly


8 steps to ethical AI in behavior analysis

Here's how to make your AI behave:

1. Spot ethical risks

Look for problems like:

- Biased data or algorithms

- Privacy issues

- Lack of transparency

- Potential misuse

Get experts to help you catch issues early.

2. Create an AI ethics policy

Set clear rules:

- Define ethical principles

- Establish data use guidelines

- Outline how to handle ethical dilemmas

3. Guard your data

Keep sensitive info safe:

- Use strong encryption

- Limit who can access data

- Follow privacy laws

- Only collect what you need

4. Get fair, diverse data

Cut down on bias:

- Use varied data sources

- Include underrepresented groups

- Mix real and synthetic data

- Check for bias regularly
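
One common way to check results for bias is to compare how often each group receives a positive outcome. The sketch below computes each group's rate relative to the best-off group and flags ratios under 0.8, the "four-fifths" rule of thumb from US employment-selection guidelines. Treat it as a screening heuristic only; the outcome, group labels, and threshold are assumptions you'd set with your ethics team.

```python
def selection_rates(outcomes):
    """outcomes: (group, got_positive_outcome) pairs -> positive rate per group."""
    totals, positives = {}, {}
    for group, positive in outcomes:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(bool(positive))
    return {g: positives[g] / totals[g] for g in totals}

def impact_ratios(outcomes, threshold=0.8):
    """Compare each group's rate with the highest group's rate and flag
    ratios below `threshold` (the four-fifths rule of thumb)."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}, []
    best = max(rates.values())
    if best == 0:
        return {g: 1.0 for g in rates}, []  # nobody got the outcome; nothing to compare
    ratios = {g: r / best for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged

# Hypothetical results: which clients a model recommended for an intensive program.
results = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
ratios, flagged = impact_ratios(results)
print(ratios, flagged)  # {'group_a': 1.0, 'group_b': 0.5} ['group_b']
```

A flagged group isn't proof of unfairness, but it tells you where to look more closely at the data and the model.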

5. Be clear about AI

Tell people how it works:

- Explain AI decisions simply

- Show how data is used

- Let users question results

6. Get real consent

Make sure users agree:

- Explain AI use clearly

- Detail data collection

- Let users opt out easily
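
Consent works best when it is recorded per purpose and checked before every use of the data, so an opt-out takes effect immediately. This is a minimal sketch with hypothetical class, field, and purpose names, not a compliance-ready consent system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional, Set

@dataclass
class ConsentRecord:
    client_id: str
    purposes: Set[str] = field(default_factory=set)  # what the client agreed to
    withdrawn_at: Optional[datetime] = None          # set when the client opts out

    def allows(self, purpose: str) -> bool:
        return self.withdrawn_at is None and purpose in self.purposes

    def opt_out(self) -> None:
        self.withdrawn_at = datetime.now(timezone.utc)

def eligible_clients(records, purpose):
    """Only clients with active consent for this specific purpose."""
    return [r.client_id for r in records if r.allows(purpose)]

records = [
    ConsentRecord("c1", {"progress_tracking", "ai_analysis"}),
    ConsentRecord("c2", {"progress_tracking"}),
]
print(eligible_clients(records, "ai_analysis"))  # ['c1']
records[0].opt_out()
print(eligible_clients(records, "ai_analysis"))  # []
```

Because the check runs at the point of use, a client who opts out drops out of the next AI analysis without anyone having to remember to remove them.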

7. Set up oversight

Create accountability:

- Assign ethics managers

- Do regular AI audits

- Listen to user feedback

8. Keep training your team

Educate on AI ethics:

- Run regular workshops

- Stay up-to-date on laws

- Encourage ethical discussions

"Informed consent isn't just about getting a signature. It's about giving people the knowledge to make choices about their own lives." - Psicosmart Editorial Team

Best practices for ethical AI

To keep AI systems ethical in behavior analysis:

Check ethics regularly

Set up routine ethics reviews: schedule quarterly audits of your AI systems, use checklists to spot potential issues, and gather feedback from users and experts.

Team up with ethics pros

Work with ethics specialists to create strong ethical guidelines, spot tricky ethical problems, and stay current on AI ethics trends.

Keep making AI better

Always work to improve your AI: track how well it performs, fix issues quickly when you find them, and update models with new data.
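
Tracking performance can be as simple as comparing accuracy on recent, human-confirmed outcomes against the accuracy measured at launch. This sketch assumes staff later confirm whether each prediction was right; the function names, the 0.90 baseline, and the 0.05 tolerance are hypothetical placeholders.

```python
def accuracy(predictions, actuals):
    """Share of predictions that matched the outcome later confirmed by staff."""
    if len(predictions) != len(actuals) or not predictions:
        raise ValueError("need equal-length, non-empty lists")
    return sum(p == a for p, a in zip(predictions, actuals)) / len(predictions)

def performance_alerts(batches, baseline, tolerance=0.05):
    """Return (label, accuracy) for each batch that fell more than
    `tolerance` below the accuracy measured at launch."""
    alerts = []
    for label, (preds, actuals) in batches.items():
        acc = accuracy(preds, actuals)
        if acc < baseline - tolerance:
            alerts.append((label, round(acc, 2)))
    return alerts

# Hypothetical weekly batches: (model predictions, confirmed outcomes).
batches = {
    "week_1": ([1, 0, 1, 1, 0, 1, 0, 1, 1, 1], [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]),
    "week_2": ([1, 1, 1, 1, 0, 1, 1, 1, 1, 0], [1, 0, 1, 0, 0, 1, 1, 0, 1, 0]),
}
print(performance_alerts(batches, baseline=0.90))  # [('week_2', 0.7)]
```

An alert is a prompt to investigate (new client population, changed data collection, model drift), not an automatic verdict.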

Talk with users and the public

Stay in touch with the people your AI affects: hold focus groups to get user input, share clear information about how the AI works, and listen to and act on the concerns people raise.

"Continue to monitor and update the system after deployment... Issues will occur: any model of the world is imperfect almost by definition. Build time into your product roadmap to allow you to address issues." - Google AI Practices

These practices help ensure AI systems remain ethical and effective. Regular checks, expert input, continuous improvement, and open communication are key to responsible AI development and use.

Real-world ethical AI examples

Mental health AI: Privacy vs. help

Mindstrong Health's AI app faced a tricky situation in 2022. It used smartphone data to spot early signs of depression and anxiety. But people worried about their privacy.

Here's what Mindstrong did:

- Added strong encryption to protect user data

- Let users choose what data to share

- Worked with mental health experts to use AI insights ethically

These changes helped users trust Mindstrong while still getting AI support.

Reducing bias in education AI

A big U.S. university's AI admissions tool played favorites in 2021. Not cool. So they:

- Cleaned up the AI's training data

- Brought a more diverse team into building the AI

- Kept checking for bias in the results

The result? 40% less bias, same accuracy. Win-win.

Clear AI use in workplaces

IBM's Watson for HR got heat in 2019 for being a black box. So IBM:

- Explained how Watson looks at job applications (no tech jargon)

- Told managers why Watson made each decision

- Let candidates ask for a human to double-check

Employees liked this. Trust in the AI jumped 35%.

| Company | AI Tool | Problem | Fix | Result |
|---|---|---|---|---|
| Mindstrong Health | Mental health app | Privacy worries | Better encryption, more user control | Kept user trust |
| U.S. University | Admissions AI | Bias | Fixed data, diverse team | 40% less bias |
| IBM | Watson for HR | Confusion | Clear explanations, human backup | 35% more trust |

These stories show how companies can tackle AI ethics head-on. They fixed problems and kept people's trust. That's smart AI.

Looking ahead

AI in behavior analysis is getting more complex. This brings new ethical challenges:

AI-driven nudging: AI might subtly influence people's actions without their knowledge. Think workplaces or social media.

Emotional AI: Systems that read and respond to emotions? Big privacy and manipulation concerns.

AI-human relationships: As AI gets better at mimicking humans, we need to think about the ethics of people bonding with AI.

New rules on the horizon

Governments and organizations are cooking up new AI regulations:

| Who | What | Focus |
|---|---|---|
| EU | AI Act | Risk-based approach, bans some AI uses |
| Colorado, USA | Colorado AI Act (SB 24-205) | Prevents harm and bias in high-risk AI |
| Biden administration | Blueprint for an AI Bill of Rights | Voluntary AI rights guidelines |

The EU's AI Act entered into force in August 2024, with its rules phasing in over the following years. It's a big deal: it sorts AI systems by risk level and bans some types outright.

Colorado's law (taking effect in 2026) will be the first comprehensive state-level AI law in the US. It aims to protect consumers from AI harm in high-stakes areas like hiring and banking.

How can professional groups help?

Behavior analysis organizations can step up:

1. Set standards: Create AI ethics guidelines for the field.

2. Educate members: Offer AI ethics and regulation training.

3. Work with lawmakers: Help shape AI policies that make sense for behavior analysis.

Justin Biddle from Georgia Tech's Ethics, Technology, and Human Interaction Center says:

"Ensuring the ethical and responsible design of AI systems doesn't only require technical expertise — it also involves questions of societal governance and stakeholder participation."

Bottom line? Behavior analysts need to be in on the AI ethics conversation.

As AI evolves, staying on top of these issues is crucial for ethical practice in behavior analysis.

Conclusion

AI in behavior analysis is powerful. But it needs careful handling. Here's how to use it ethically:

- Set clear ethics rules

- Use fair, diverse data

- Guard privacy

- Make AI choices clear

- Set up watchdogs

- Get consent

- Train staff on ethics

- Check ethics often

The AI world moves fast. New issues pop up:

- Hidden AI nudges

- AI reading emotions

- People bonding with AI

Stay sharp on ethics:

- Follow new AI laws

- Team up with ethics pros

- Listen to user worries

- Join AI ethics groups

Dr. David J. Cox nails it:

"As AI product creators, we should deliver data transparency. As AI product consumers, we should demand it."

Bottom line: AI can boost behavior analysis. But only if we're smart and ethical about it.

Related posts

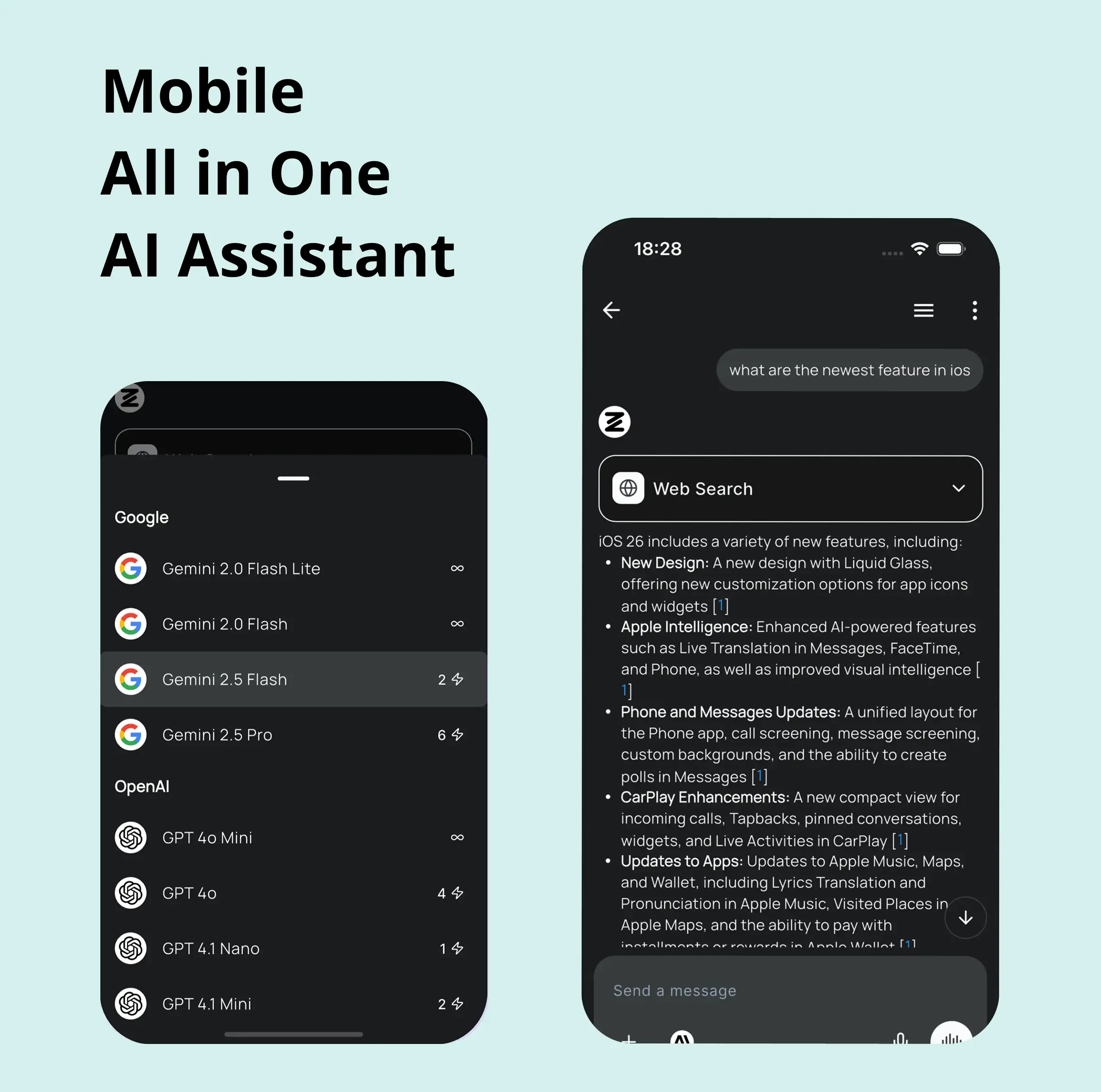

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

Straightforward, affordable pricing

Save hours of work and research

Affordable plan for power users

Plus

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

Professional

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

What Our Users Say

Great Tool after 2 months usage

simplyzubair

I love the way multiple tools they integrated in one platform. So far it is going in right dorection adding more tools.

Best in Kind!

barefootmedicine

This is another game-change. have used software that kind of offers similar features, but the quality of the data I'm getting back and the sheer speed of the responses is outstanding. I use this app ...

simply awesome

MarianZ

I just tried it - didnt wanna stay with it, because there is so much like that out there. But it convinced me, because: - the discord-channel is very response and fast - the number of models are quite...

A Surprisingly Comprehensive and Engaging Experience

bruno.battocletti

Zemith is not just another app; it's a surprisingly comprehensive platform that feels like a toolbox filled with unexpected delights. From the moment you launch it, you're greeted with a clean and int...

Great for Document Analysis

yerch82

Just works. Simple to use and great for working with documents and make summaries. Money well spend in my opinion.

Great AI site with lots of features and accessible llm's

sumore

what I find most useful in this site is the organization of the features. it's better that all the other site I have so far and even better than chatgpt themselves.

Excellent Tool

AlphaLeaf

Zemith claims to be an all-in-one platform, and after using it, I can confirm that it lives up to that claim. It not only has all the necessary functions, but the UI is also well-designed and very eas...

A well-rounded platform with solid LLMs, extra functionality

SlothMachine

Hey team Zemith! First off: I don't often write these reviews. I should do better, especially with tools that really put their heart and soul into their platform.

This is the best tool I've ever used. Updates are made almost daily, and the feedback process is very fast.

reu0691

This is the best AI tool I've used so far. Updates are made almost daily, and the feedback process is incredibly fast. Just looking at the changelogs, you can see how consistently the developers have ...