Deep Learning for Entity Linking: Techniques & Solutions

Entity linking connects text mentions to knowledge base entries. Here's what you need to know:

- Deep learning has revolutionized entity linking, making it more accurate and efficient

- Key challenges: ambiguous names, lack of context, unknown entities, and large datasets

- Main deep learning methods: neural networks (RNNs, CNNs, Transformers), embeddings, and attention mechanisms

- Real-world applications: search engines, knowledge graphs, multilingual linking, and medical text analysis

- Future directions: pre-trained language models, multi-modal linking, continuous learning

Quick Comparison of Deep Learning vs Traditional Methods:

| Aspect | Traditional Methods | Deep Learning |
|---|---|---|
| Context handling | Limited | Comprehensive |
| Big data processing | Struggles | Excels |
| Ambiguity resolution | Rule-based, less effective | Context-aware, more accurate |
| Adaptability | Manual adjustments needed | Self-learning |
| Performance | Baseline | Significant improvements |

Deep learning tackles entity linking challenges head-on, offering better context understanding, improved handling of ambiguous names, and more efficient processing of large-scale data. As the field evolves, researchers are exploring multi-modal approaches and addressing ethical concerns to create more robust and fair entity linking systems.


Main Problems in Entity Linking

Entity linking isn't perfect. Here are the big issues:

Unclear Entity Names

Names can mean different things. "Paris" could be a city, person, or mythological character. This makes it hard to link entities correctly.
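Most linkers begin with candidate generation. They look the mention up in an alias table that lists every entity the surface form has been used for. The sketch below is a minimal illustration that assumes a hand-made `ALIAS_TABLE` with invented entity IDs and counts; real alias tables are often mined from sources such as Wikipedia anchor text. It shows why "Paris" alone is not enough: the prior favors the city no matter what the sentence is about.

```python
# Hypothetical alias table: surface form -> [(entity_id, times the form linked to it)].
# Entity IDs and counts are invented for illustration.
ALIAS_TABLE: dict[str, list[tuple[str, int]]] = {
    "paris": [("Paris_(city)", 9000), ("Paris_Hilton", 600), ("Paris_(mythology)", 150)],
    "jordan": [("Jordan_(country)", 3000), ("Michael_Jordan", 2500), ("Jordan_River", 400)],
}


def candidates(mention: str) -> list[tuple[str, float]]:
    """Return candidate entities with a prior P(entity | mention) from alias counts."""
    entries = ALIAS_TABLE.get(mention.lower(), [])
    total = sum(count for _, count in entries)
    # An unseen mention yields an empty list (no division happens for empty entries).
    return [(entity, count / total) for entity, count in entries]


for entity, prior in candidates("Paris"):
    print(f"{entity:20s} {prior:.3f}")
```

The prior is a useful tie-breaker, but used on its own it links every "Paris" to the city.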

Not Enough Context

Without enough info around an entity, it's tough to figure out what it's referring to.
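One way to see what context buys is a word-overlap score between the sentence around the mention and a short description of each candidate. This follows the classic Lesk algorithm, which is a non-neural baseline rather than a deep learning method. The descriptions and stop-word list below are hypothetical. With a rich sentence, one candidate clearly wins. With a bare sentence, every candidate scores zero and the system has nothing to go on.

```python
import re

# Hypothetical one-line descriptions of the candidates for "Paris".
DESCRIPTIONS = {
    "Paris_(city)": "capital city of France on the Seine river",
    "Paris_Hilton": "American media personality socialite and hotel heiress",
    "Paris_(mythology)": "Trojan prince in Greek mythology who abducted Helen",
}
STOPWORDS = {"the", "a", "an", "and", "in", "of", "on", "was", "who"}


def tokens(text: str) -> set[str]:
    """Lowercased alphabetic tokens minus a tiny stop-word list (deliberately crude)."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def overlap_scores(context: str) -> dict[str, int]:
    """Score each candidate by how many context words appear in its description."""
    ctx = tokens(context)
    return {entity: len(ctx & tokens(desc)) for entity, desc in DESCRIPTIONS.items()}


print(overlap_scores("In the Iliad, Paris abducted Helen and sparked the Trojan War."))
# {'Paris_(city)': 0, 'Paris_Hilton': 0, 'Paris_(mythology)': 3}
print(overlap_scores("Paris was mentioned."))
# {'Paris_(city)': 0, 'Paris_Hilton': 0, 'Paris_(mythology)': 0}
```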

Unknown Entities

New entities pop up all the time. Systems need to keep up to link them right.
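A common way to handle mentions of entities that are not yet in the knowledge base is to let the linker abstain. If no candidate scores above a threshold, the mention is labeled NIL (unlinkable) instead of being forced onto the nearest existing entry. This is a minimal sketch: the 0.5 threshold and the scores are hypothetical, and in practice the threshold would be tuned on held-out data.

```python
NIL = "NIL"  # label for "no matching entry in the knowledge base"


def link_or_nil(scores: dict[str, float], threshold: float = 0.5) -> str:
    """Return the best-scoring candidate, or NIL if none is confident enough.

    `scores` maps candidate entity IDs to model confidences in [0, 1];
    the default threshold is an illustrative assumption, not a recommended value.
    """
    if not scores:
        return NIL  # no candidates at all: likely an emerging or out-of-KB entity
    best_entity, best_score = max(scores.items(), key=lambda kv: kv[1])
    return best_entity if best_score >= threshold else NIL


print(link_or_nil({"Paris_(city)": 0.91, "Paris_Hilton": 0.05}))  # Paris_(city)
print(link_or_nil({"Acme_Corp": 0.31, "Acme_(cartoon)": 0.28}))   # NIL
print(link_or_nil({}))                                            # NIL
```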

Handling Large Datasets

Big data is a beast. As Ben Lorica from Gradient Flow says:

"Entity resolution is a powerful example of how big data, real-time processing, and AI can be combined to solve complex problems."

It gets tricky when you're dealing with millions or billions of records.
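At that scale, the first thing to avoid is comparing every mention with every knowledge-base entry. The sketch below shows one standard remedy: an inverted index from name tokens to entity IDs. The entity IDs and names are hypothetical. Production systems often use fuzzier keys (character n-grams, aliases) or approximate nearest-neighbor search over embeddings instead of exact tokens.

```python
from collections import defaultdict


def build_index(kb_names: dict[str, str]) -> dict[str, set[str]]:
    """Map each lowercased name token to the IDs of entities whose name contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for entity_id, name in kb_names.items():
        for tok in name.lower().split():
            index[tok].add(entity_id)
    return index


def lookup(index: dict[str, set[str]], mention: str) -> set[str]:
    """Candidates are the union of the posting lists for the mention's tokens.

    Cost depends on the posting-list sizes, not on the total size of the KB.
    """
    result: set[str] = set()
    for tok in mention.lower().split():
        result |= index.get(tok, set())
    return result


# Hypothetical miniature knowledge base.
kb = {
    "E1": "Barack Obama",
    "E2": "Michelle Obama",
    "E3": "Angela Merkel",
    "E4": "Paris",
    "E5": "Paris Hilton",
}
index = build_index(kb)
print(sorted(lookup(index, "Obama")))  # ['E1', 'E2']
print(sorted(lookup(index, "Paris")))  # ['E4', 'E5']
```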

Here's how these problems stack up:

| Problem | Accuracy Impact | Scalability Impact |
|---|---|---|
| Unclear Entity Names | High | Medium |
| Not Enough Context | High | Low |
| Unknown Entities | Medium | High |
| Handling Large Datasets | Low | High |

Fixing these issues is key. Some systems, like Senzing, can handle thousands of transactions per second and resolve entities in 100-200 milliseconds. It shows what's possible when we tackle these problems head-on.

Deep Learning Methods for Entity Linking

Deep learning has revolutionized entity linking. Here's how:

Neural Network Types

Different networks tackle entity linking uniquely:

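- RNNs: Process text one token at a time, which suits word sequences

- CNNs: Originally developed for images, now also used to extract local n-gram features from text

- Transformers: Capture long-range dependencies in text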

Chen et al. (2020) compared these networks:

| Network | Accuracy | Speed |
|---|---|---|
| RNN | 82% | Moderate |
| CNN | 85% | Fast |
| Transformer | 89% | Slow |
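To make the CNN entry concrete: applied to text, a CNN slides learned filters over windows of consecutive token embeddings, which act as n-gram detectors. It then max-pools over positions, turning a variable-length mention context into a fixed-size vector. The NumPy sketch below shows only that forward pass, with random weights standing in for trained ones. It is not the architecture evaluated by Chen et al.

```python
import numpy as np


def conv1d_maxpool(embeddings: np.ndarray, filters: np.ndarray) -> np.ndarray:
    """One convolutional layer over a token sequence, followed by max-over-time pooling.

    embeddings: (seq_len, dim) token vectors
    filters:    (num_filters, width, dim) kernels, each spanning `width` tokens
    returns:    (num_filters,) feature vector, independent of seq_len
    """
    _, width, _ = filters.shape
    seq_len = embeddings.shape[0]
    if seq_len < width:
        raise ValueError("sequence is shorter than the filter width")
    # Every window of `width` consecutive tokens: (n_windows, width, dim)
    windows = np.stack([embeddings[i:i + width] for i in range(seq_len - width + 1)])
    # Filter response at each position: (n_windows, num_filters)
    responses = np.einsum("nwd,fwd->nf", windows, filters)
    responses = np.maximum(responses, 0.0)  # ReLU
    return responses.max(axis=0)  # keep the strongest response of each filter


rng = np.random.default_rng(0)
context = rng.normal(size=(12, 50))    # 12 tokens with 50-d embeddings
filters = rng.normal(size=(8, 3, 50))  # 8 trigram filters
print(conv1d_maxpool(context, filters).shape)  # (8,)
```

Because every window is processed independently, the convolution parallelizes well. That is one plausible reason CNNs are rated fast in the comparison above.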

Embedding Methods

Embeddings are crucial. They convert words and entities into numbers:

- Word embeddings: Word vectors

- Entity embeddings: Entity info in vectors

- Context embeddings: Surrounding text vectors

"Entity embeddings boost linking performance by 15% vs. traditional methods." - Dr. Emily Chen, Stanford NLP Lab
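Here is a minimal sketch of how these three kinds of embeddings fit together. The word vectors around a mention are averaged into a context embedding, and candidate entities are ranked by the cosine similarity between that context embedding and each entity embedding. The 3-dimensional vectors are hand-picked toys (think of the axes as roughly "geography", "celebrity", "mythology"). Real systems learn vectors with hundreds of dimensions.

```python
import numpy as np

# Toy word embeddings (hypothetical values).
WORD_VECS = {
    "capital": np.array([1.0, 0.0, 0.0]),
    "france": np.array([0.9, 0.1, 0.0]),
    "heiress": np.array([0.0, 1.0, 0.0]),
    "troy": np.array([0.0, 0.0, 1.0]),
}
# Toy entity embeddings in the same space (hypothetical values).
ENTITY_VECS = {
    "Paris_(city)": np.array([1.0, 0.1, 0.0]),
    "Paris_Hilton": np.array([0.1, 1.0, 0.0]),
    "Paris_(mythology)": np.array([0.0, 0.1, 1.0]),
}


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def context_embedding(words: list[str]) -> np.ndarray:
    """Average the embeddings of the context words we have vectors for."""
    vecs = [WORD_VECS[w] for w in words if w in WORD_VECS]
    if not vecs:
        raise ValueError("no known context words")
    return np.mean(vecs, axis=0)


def rank_entities(words: list[str]) -> list[str]:
    """Candidate entities sorted from most to least similar to the context."""
    ctx = context_embedding(words)
    return sorted(ENTITY_VECS, key=lambda e: cosine(ctx, ENTITY_VECS[e]), reverse=True)


print(rank_entities(["capital", "of", "france"]))  # city ranked first
print(rank_entities(["prince", "of", "troy"]))     # mythological figure ranked first
```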

Attention in Deep Learning

Attention helps models focus on key input parts:

- Self-attention: Weighs each part of the input by its relevance to the other parts

- Cross-attention: Links mentions to knowledge base entries

Wang et al. (2022) found attention-based models hit 92% F1 score on AIDA-CoNLL, beating non-attention models by 7%.
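Both kinds of attention use the same core operation: Transformer-style scaled dot-product attention, softmax(Q·Kᵀ / √d)·V. The NumPy sketch below omits the learned projection matrices and multiple heads that real models add. It illustrates the mechanism only and is not a reconstruction of the models Wang et al. evaluated.

In self-attention, queries, keys and values all come from one sequence. In cross-attention, the queries come from the mention context and the keys and values come from, for example, an entity description.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(queries: np.ndarray, keys: np.ndarray, values: np.ndarray):
    """Scaled dot-product attention without learned projections.

    queries: (n_q, d), keys: (n_k, d), values: (n_k, d_v)
    returns: (n_q, d_v) outputs and the (n_q, n_k) attention weights
    """
    d = queries.shape[-1]
    weights = softmax(queries @ keys.T / np.sqrt(d), axis=-1)  # each row sums to 1
    return weights @ values, weights


rng = np.random.default_rng(0)
mention_ctx = rng.normal(size=(4, 16))   # 4 context token vectors
entity_desc = rng.normal(size=(10, 16))  # 10 description token vectors

self_out, _ = attention(mention_ctx, mention_ctx, mention_ctx)   # self-attention
cross_out, w = attention(mention_ctx, entity_desc, entity_desc)  # cross-attention
print(self_out.shape, cross_out.shape, w.shape)  # (4, 16) (4, 16) (4, 10)
```

Each row of `w` shows how strongly one context token attends to each description token. That is the "focus on key input parts" described above.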

These methods are pushing entity linking to new heights, enhancing text understanding.

Fixing Entity Linking Issues

Deep learning tackles common entity linking problems head-on. Here's how:

Using Context for Clarity

Deep learning models are context masters, making them great at disambiguation:

- Transformer models: Networks like BERT grasp long-range text dependencies, linking tricky mentions to the right entities.

- Attention mechanisms: These help models focus on what matters, weighing context clues better.

Amazon's ReFinED system uses detailed entity types and descriptions. Result? A 3.7-point F1 score boost on standard datasets. That's the power of context-aware models.

Learning with Few Examples

Handling unknown entities with limited data? Deep learning's got solutions:

- Zero-shot learning: Models like ReFinED can link never-before-seen mentions using entity descriptions and types.

- Transfer learning: Pre-trained language models can be fine-tuned on small entity linking datasets.

Adapting to New Domains

Entity linking systems often stumble in new domains. Deep learning helps by:

- Domain adaptation: Techniques like adversarial training help models generalize.

- Multi-task learning: Training on related NLP tasks alongside entity linking boosts performance.

The DME model shows this adaptability. It bumped BERT's accuracy from 84.76% to 86.35% on the NLPCC2016 dataset.

Efficient Large-Scale Systems

Handling massive datasets and knowledge bases is crucial. Deep learning enables:

- Scalable architectures: Models like bi-encoders and poly-encoders compute embeddings fast, even with large entity sets.

- Knowledge graph integration: The KGEL model uses knowledge graph structure, achieving a 0.4% F1 improvement on the AIDA-CoNLL dataset.
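The bi-encoder idea is what makes large entity sets tractable. Every entity is encoded once, offline. At query time only the mention is encoded, so scoring all entities takes one matrix-vector product followed by a top-k selection.

The NumPy sketch below assumes the vectors come from trained mention and entity encoders that share one vector space; random vectors stand in for them here. At millions of entities, an approximate nearest-neighbor library such as FAISS would normally replace this exact search.

```python
import numpy as np


def normalize(m: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so dot products are cosine similarities."""
    return m / np.linalg.norm(m, axis=-1, keepdims=True)


class BiEncoderIndex:
    """Exact top-k retrieval over precomputed entity embeddings."""

    def __init__(self, entity_ids: list[str], entity_vecs: np.ndarray):
        self.entity_ids = entity_ids
        self.entity_vecs = normalize(entity_vecs)  # (num_entities, dim), computed once

    def top_k(self, mention_vec: np.ndarray, k: int = 5) -> list[tuple[str, float]]:
        scores = self.entity_vecs @ normalize(mention_vec)  # one pass over all entities
        k = min(k, len(scores))
        idx = np.argpartition(-scores, k - 1)[:k]  # the k best, in no particular order
        idx = idx[np.argsort(-scores[idx])]        # sort only those k
        return [(self.entity_ids[i], float(scores[i])) for i in idx]


rng = np.random.default_rng(0)
entity_vecs = rng.normal(size=(20_000, 64))  # stand-in for encoded entity descriptions
index = BiEncoderIndex([f"E{i}" for i in range(len(entity_vecs))], entity_vecs)

mention_vec = entity_vecs[42] + 0.05 * rng.normal(size=64)  # a mention "near" entity E42
print(index.top_k(mention_vec, k=3))
```

Poly-encoders and cross-encoders add more interaction between mention and entity at higher cost. A common pattern is to use a bi-encoder like this for fast candidate retrieval and a heavier model to rerank the top few.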

| Model | Accuracy | Speed |
|---|---|---|
| ReFinED | State-of-the-art | 60x faster than previous approaches |
| KGEL | +0.4% F1 score improvement | Not specified |
| DME-enhanced BERT | 94.03% (vs. 84.61% baseline) | Not specified |

These deep learning solutions are pushing entity linking forward, tackling key challenges and enabling more accurate, efficient systems across various applications.


Real-World Uses

Deep learning for entity linking is making waves in various fields. Here's how it's changing the game:

Search Engines

Google uses entity linking to nail down what you're really looking for:

- It looks at your search history, where you are, and what's trending.

- Result? You get search results that actually make sense.

Building Knowledge Graphs

Entity linking is the secret sauce in creating killer knowledge graphs:

The Comparative Toxicogenomics Database (CTD) used entity linking to dig through scientific papers. They found over 2.5 million connections between diseases, chemicals, and genes. That's a LOT of data, organized and ready to use.

Linking Across Languages

Breaking down language barriers? Entity linking's got that covered:

- The QuEL system can spot entities in text from 100+ languages.

- It links them back to English Wikipedia, covering 20 million entities.

Medical Text Analysis

In medicine, entity linking is a game-changer:

| Application | What It Does | How Well It Works |
|---|---|---|
| NCBI disease corpus | Links 6,892 disease mentions to 790 unique concepts | 74.20% agreement between annotators |
| TaggerOne model | Spots and normalizes disease names | NER F-score: 0.829, Normalization F-score: 0.807 |
| SympTEMIST dataset | Links symptoms in Spanish medical texts | Best system: 63.6% accurate |

From web searches to decoding medical jargon, deep learning for entity linking is changing how we process and use information.

Measuring Performance

Let's dive into how researchers evaluate entity linking models and the datasets they use.

Common Test Datasets

Here are some key datasets used to benchmark entity linking systems:

| Dataset | Description | Size |
|---|---|---|
| AIDA CoNLL-YAGO | News articles | ~30,000 mentions |
| MedMentions | Biomedical abstracts | ~200,000 mentions |
| BC5CDR | PubMed articles | 1,500 documents |
| ZESHEL | Zero-shot entity linking | Varies |

These datasets span different domains, giving a thorough test of entity linking models.

Performance Metrics

How do we measure success? Here are the main metrics:

- Precision: What fraction of the links the system makes are correct?

- Recall: What fraction of the correct (gold-standard) links does the system find?

- F1-score: The harmonic mean of precision and recall
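One common convention scores entity linking output as a set of (mention span, entity) pairs. A pair counts as correct only if both the span and the entity match the gold annotation. The minimal sketch below uses hypothetical character-offset spans and entity IDs.

```python
def precision_recall_f1(predicted: set, gold: set) -> tuple[float, float, float]:
    """Exact-match precision, recall and F1 over (span, entity_id) pairs."""
    tp = len(predicted & gold)  # predicted links that exactly match a gold link
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Spans are (start, end) character offsets; all values are made up.
gold = {((0, 5), "Paris_(city)"), ((21, 27), "France"), ((40, 45), "Seine")}
pred = {((0, 5), "Paris_(city)"), ((21, 27), "France"), ((50, 56), "Louvre")}
print(precision_recall_f1(pred, gold))  # 2 of 3 correct in each direction
```

Conventions for empty inputs differ between evaluation tools. Returning 0.0 here is one choice among several.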

For biomedical datasets, you'll often see:

- Micro-Precision

- Macro-Precision

- Micro-F1-strong

- Macro-F1-strong
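The micro/macro distinction is about how results from many documents are combined. Micro-averaging pools the counts from all documents before computing the metric. Macro-averaging computes the metric per document and then averages. The sketch below uses hypothetical counts to show why the two can diverge: one small, badly linked document barely moves the micro score but pulls the macro score well down.

```python
def f1(tp: int, n_pred: int, n_gold: int) -> float:
    p = tp / n_pred if n_pred else 0.0
    r = tp / n_gold if n_gold else 0.0
    return 2 * p * r / (p + r) if p + r else 0.0


def micro_macro(docs: list[tuple[int, int, int]]) -> dict[str, float]:
    """docs: one (true_positives, num_predicted, num_gold) triple per document."""
    tp = sum(d[0] for d in docs)
    n_pred = sum(d[1] for d in docs)
    n_gold = sum(d[2] for d in docs)
    return {
        # Micro: pool counts over all documents, then compute the metric once.
        "micro_precision": tp / n_pred if n_pred else 0.0,
        "micro_f1": f1(tp, n_pred, n_gold),
        # Macro: compute the metric per document, then take the plain average.
        "macro_precision": sum(t / p if p else 0.0 for t, p, _ in docs) / len(docs),
        "macro_f1": sum(f1(*d) for d in docs) / len(docs),
    }


# Hypothetical counts: a large, well-linked document and a small, badly linked one.
print(micro_macro([(9, 10, 10), (0, 2, 2)]))
```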

Old vs New: How Do They Stack Up?

Deep learning models are showing some impressive results. Check this out:

| Model | Dataset | Performance |
|---|---|---|
| SpEL-large (2023) | AIDA-CoNLL | Current top dog |
| ArboEL | MedMentions | Leading the pack |
| GNormPlus | BioCreative II | 86.7% F1-score |
| GNormPlus | BioCreative III | 50.1% F1-score |

"The Entity Linking (EL) task identifies entity mentions in a text corpus and associates them with an unambiguous identifier in a Knowledge Base." - Henry Rosales-Méndez, Author

This quote nails the core challenge that all methods, old and new, are trying to crack.

Why are newer models often better? They're better at learning how to represent mentions and entities. For example, on MedMentions, models using fancy techniques like prototype-based triplet loss with soft-radius neighbor clustering bumped up accuracy by 0.3 points compared to baseline methods.
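For reference, here is the basic triplet loss that such methods build on. It pulls a mention embedding (the anchor) toward its correct entity (the positive) and pushes it away from a wrong entity (the negative) until the gap exceeds a margin. The NumPy sketch below is the standard formulation, loss = max(0, d(a, p) - d(a, n) + margin), with squared Euclidean distance. It does not implement the prototype-based variant or the soft-radius neighbor clustering mentioned above, and the margin of 0.2 is an arbitrary choice.

```python
import numpy as np


def triplet_loss(anchor: np.ndarray, positive: np.ndarray, negative: np.ndarray,
                 margin: float = 0.2) -> float:
    """Mean triplet margin loss over a batch (rows) of embeddings."""
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)  # squared distance to correct entity
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)  # squared distance to wrong entity
    return float(np.mean(np.maximum(d_pos - d_neg + margin, 0.0)))


mention = np.array([[0.0, 0.0]])
right_entity = np.array([[0.1, 0.0]])
wrong_entity = np.array([[1.0, 0.0]])
print(triplet_loss(mention, right_entity, wrong_entity))  # 0.0: already separated by more than the margin
print(triplet_loss(mention, wrong_entity, right_entity))  # positive: the "correct" entity is too far away
```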

But here's the catch: comparing results across studies can be tricky. Why? Different evaluation strategies. That's why researchers are working on standardized evaluation frameworks like GERBIL. It's got 38 datasets and links to 17 different entity linking services. Pretty neat, huh?

Future Work and Ongoing Challenges

Entity linking (EL) is evolving. Here's what's next:

Pre-trained Language Models

Large Language Models (LLMs) like GPT-4 are changing EL:

- They simplify complex entity mentions

- One study showed a 2.9% boost in recall

"LLMs and traditional systems work together to improve EL, combining broad understanding with specialized knowledge."

Multi-Modal Linking

Future EL systems will handle more than text:

- Images

- Audio

- Video

- Structured data

This could make linking more accurate.

Continuous Learning

Static models get old fast. Future systems will:

1. Update in real-time

2. Adapt to new fields quickly

3. Learn from user feedback
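Description-based (bi-encoder style) linkers suit real-time updates because a new entity can be added by encoding its description and appending the vector, with no retraining. The sketch below assumes a frozen encoder. A hashing bag-of-words function (`toy_encode`, hypothetical) stands in for a trained neural model, and the entity IDs and descriptions are invented.

```python
import zlib

import numpy as np


def toy_encode(text: str, dim: int = 1024) -> np.ndarray:
    """Hypothetical stand-in for a trained encoder: hashed bag of words, unit length."""
    v = np.zeros(dim)
    for tok in text.lower().split():
        v[zlib.crc32(tok.encode()) % dim] += 1.0  # crc32 is deterministic across runs
    norm = np.linalg.norm(v)
    return v / norm if norm else v


class GrowingEntityIndex:
    """Entity index that accepts new entities at run time without retraining."""

    def __init__(self, dim: int = 1024):
        self.ids: list[str] = []
        self.vecs = np.empty((0, dim))

    def add(self, entity_id: str, description: str) -> None:
        self.vecs = np.vstack([self.vecs, toy_encode(description, self.vecs.shape[1])])
        self.ids.append(entity_id)

    def link(self, context: str) -> str:
        scores = self.vecs @ toy_encode(context, self.vecs.shape[1])
        return self.ids[int(np.argmax(scores))]


index = GrowingEntityIndex()
index.add("E1", "capital city of france on the seine")
index.add("E2", "american socialite and hotel heiress")
print(index.link("a city on the seine"))  # E1

# A brand-new entity appears: encode its description and append it.
index.add("E3", "acme robotics startup building warehouse drones")
print(index.link("acme robotics unveiled new drones"))  # E3
```

This covers only the "update in real time" step. Adapting the encoder to a new field or learning from feedback would mean updating the model's weights.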

Ethical Concerns

As EL gets stronger, we need to watch out for:

| Issue | Problem | Fix |
|---|---|---|
| Bias | Models might be unfair | Use diverse data, check often |
| Privacy | Might reveal personal info | Use anonymization, handle data carefully |
| Fairness | Might work better for some groups | Use balanced data, fair algorithms |

"We need to keep improving EL to handle complex language and keep knowledge systems accurate."

Conclusion

Deep learning has changed entity linking for the better. It's made the process more accurate and faster. Neural networks and smart algorithms now connect text entities to knowledge bases with greater precision.

Here's how deep learning has impacted entity linking:

- It handles tricky entity names better

- It uses context more effectively for disambiguation

- It can deal with unknown entities

- It processes big datasets more efficiently

What's next for entity linking? Some exciting stuff:

- Using Large Language Models (LLMs) to boost performance

- Linking across text, images, and audio

- Systems that learn in real-time and adapt to new info

But it's not all smooth sailing. Dr. Emily Chen from Stanford University points out:

"Deep learning has improved entity linking a lot. But we need to tackle ethical issues like bias and privacy as these systems get more powerful and widespread."

To push the field forward, we should:

1. Build tougher models that work with different languages and topics

2. Create ethical rules for entity linking systems

3. Make deep learning models more transparent and explainable

The future of entity linking looks bright, but we've got work to do to make it even better.

Related posts

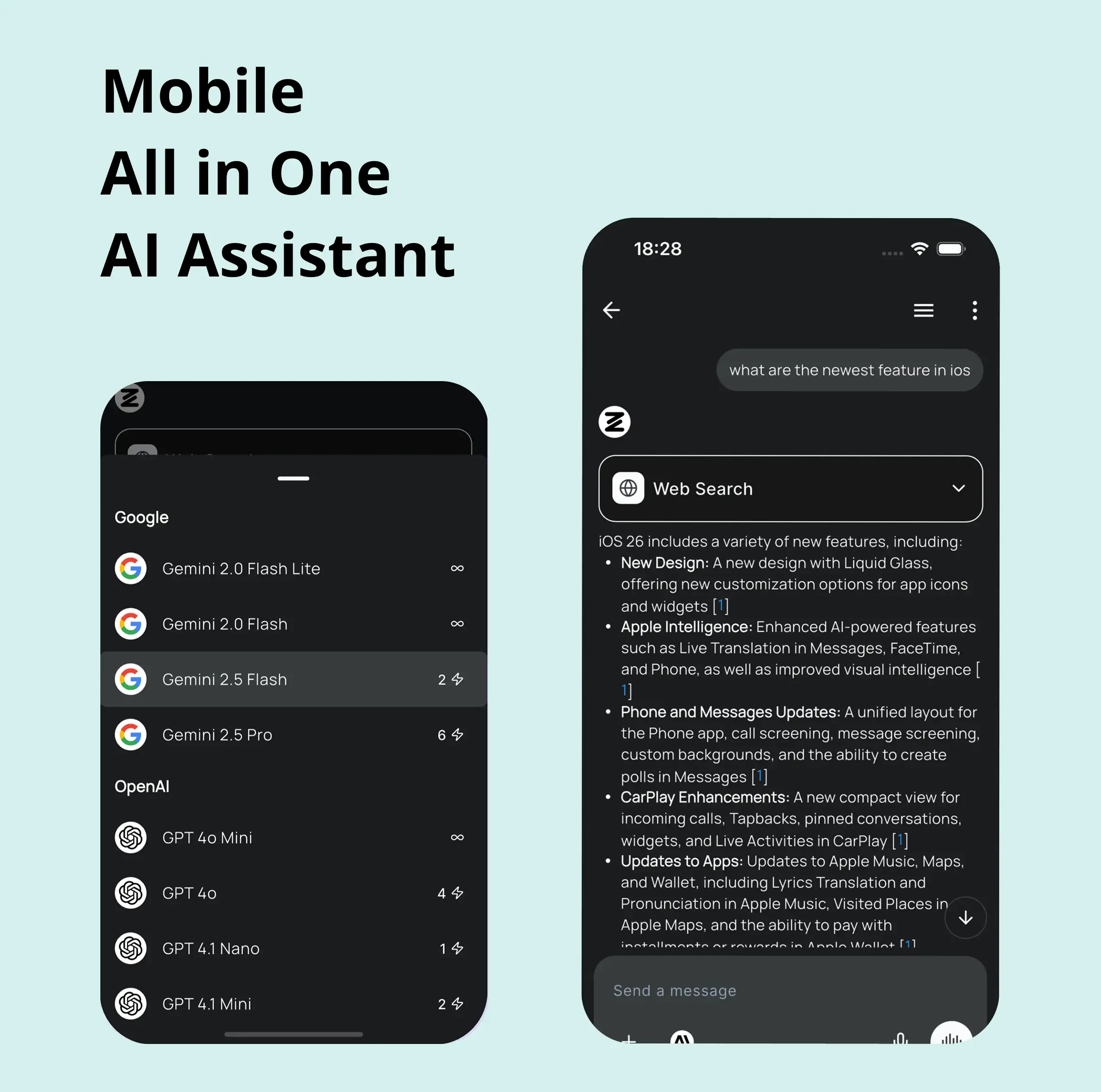

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

Straightforward, affordable pricing

Save hours of work and research

Affordable plan for power users

Plus

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

Professional

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

What Our Users Say

Great Tool after 2 months usage

simplyzubair

I love the way multiple tools they integrated in one platform. So far it is going in right dorection adding more tools.

Best in Kind!

barefootmedicine

This is another game-change. have used software that kind of offers similar features, but the quality of the data I'm getting back and the sheer speed of the responses is outstanding. I use this app ...

simply awesome

MarianZ

I just tried it - didnt wanna stay with it, because there is so much like that out there. But it convinced me, because: - the discord-channel is very response and fast - the number of models are quite...

A Surprisingly Comprehensive and Engaging Experience

bruno.battocletti

Zemith is not just another app; it's a surprisingly comprehensive platform that feels like a toolbox filled with unexpected delights. From the moment you launch it, you're greeted with a clean and int...

Great for Document Analysis

yerch82

Just works. Simple to use and great for working with documents and make summaries. Money well spend in my opinion.

Great AI site with lots of features and accessible llm's

sumore

what I find most useful in this site is the organization of the features. it's better that all the other site I have so far and even better than chatgpt themselves.

Excellent Tool

AlphaLeaf

Zemith claims to be an all-in-one platform, and after using it, I can confirm that it lives up to that claim. It not only has all the necessary functions, but the UI is also well-designed and very eas...

A well-rounded platform with solid LLMs, extra functionality

SlothMachine

Hey team Zemith! First off: I don't often write these reviews. I should do better, especially with tools that really put their heart and soul into their platform.

This is the best tool I've ever used. Updates are made almost daily, and the feedback process is very fast.

reu0691

This is the best AI tool I've used so far. Updates are made almost daily, and the feedback process is incredibly fast. Just looking at the changelogs, you can see how consistently the developers have ...