7 Qualitative Research Analysis Techniques for 2025

Discover the top qualitative research analysis techniques. This guide covers thematic analysis, grounded theory, and more to elevate your data interpretation.

Qualitative research is about understanding the 'why' behind the numbers. While quantitative data tells you the what, where, and when, qualitative insights reveal the rich, complex stories of human experience. But how do you systematically turn hours of interviews, pages of open-ended survey responses, or detailed field notes into coherent, meaningful findings? The answer lies in mastering the right qualitative research analysis techniques.

This comprehensive guide is designed to be your practical roadmap. We will explore seven powerful methods, breaking down their core principles, specific applications, and actionable steps to get you started. From identifying recurring patterns with Thematic Analysis to building new theories from your data with Grounded Theory, you'll gain a clear understanding of how to choose and apply the best approach for your research goals.

We'll move beyond abstract theory to provide a clear, actionable framework for each technique. This article will equip you with the tools to analyze your data with rigor and depth, so you can confidently extract the valuable narratives hidden within. For those looking to accelerate this process, we'll also touch on how integrated AI platforms like Zemith can help manage and code your data, allowing you to focus on the critical work of interpretation and insight generation. Whether you are a researcher, marketer, or content creator, this roundup will help you unlock the powerful stories your data has to tell.

1. Thematic Analysis

Thematic analysis is one of the most foundational and flexible qualitative research analysis techniques. It provides a rich, detailed, and complex account of data by identifying, analyzing, and reporting patterns, or "themes." Popularized by psychologists Virginia Braun and Victoria Clarke, this method is highly accessible, making it an excellent starting point for researchers new to qualitative inquiry. It is not tied to a specific theoretical framework, allowing it to be adapted for various research questions and disciplines.

The core of thematic analysis involves a systematic process of reading through data, applying codes to segments of text, and then grouping these codes into broader, overarching themes that capture essential meanings relevant to your research question.

When to Use Thematic Analysis

This approach is particularly powerful when you need to understand a set of experiences, views, or behaviors across a qualitative dataset. It’s ideal for answering broad research questions like "What are the main challenges faced by remote workers?" or "How do consumers perceive our brand's sustainability efforts?"

- Exploratory Research: When you want to explore and summarize the key features of a large body of data without a pre-existing coding frame.

- Summarizing Participant Perspectives: It is perfect for capturing the similarities and differences in participants' viewpoints on a specific topic.

- Policy and Practice Recommendations: By identifying key themes, researchers can develop actionable insights for organizational change or policy development.

How Thematic Analysis Works: A Step-by-Step Overview

While Braun and Clarke outline a six-phase process, the initial stages are crucial for building a solid foundation. The goal is to move from a vast, unstructured dataset to a structured, insightful narrative supported by clear themes.

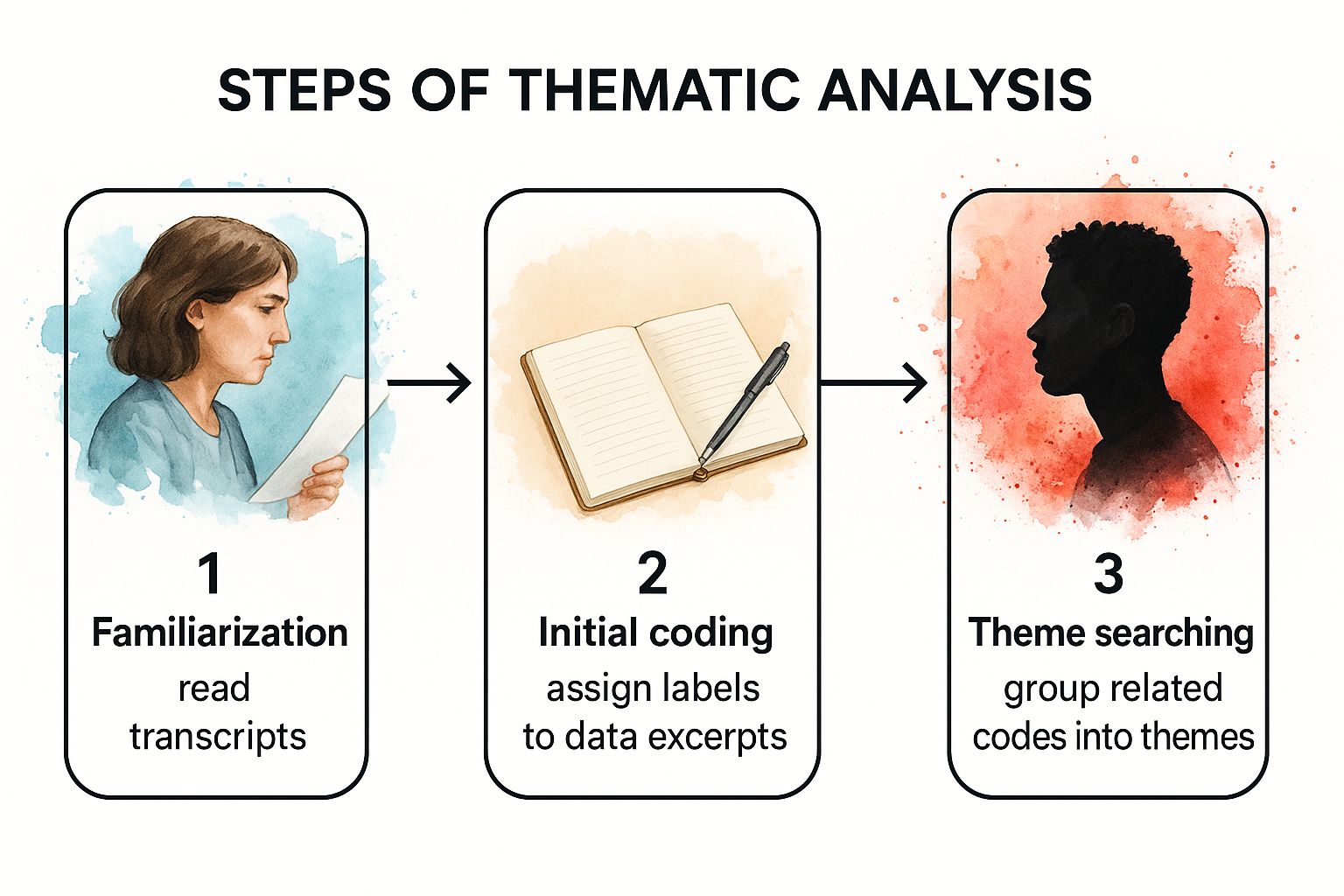

The following infographic illustrates the foundational workflow for getting started with thematic analysis, highlighting the progression from raw data to organized themes.

This visual process flow underscores how each step builds upon the last, transforming raw qualitative data into meaningful, structured insights.

Actionable Tip: To strengthen the rigor of your analysis, create a "codebook" within a centralized workspace like Zemith.com. This living document defines each code and theme and records inclusion and exclusion criteria for applying them. This practice is invaluable for ensuring consistency, especially when collaborating with other researchers, and keeps your analysis organized in one place.
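To make the codebook idea concrete, here is a minimal sketch of how codes and themes might be represented. The class names, fields, and the two example codes are illustrative assumptions, not a format prescribed by Braun and Clarke or by any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class Code:
    """One codebook entry: a label plus the rules for applying it."""
    name: str
    definition: str
    include: str  # when the code applies
    exclude: str  # when it does not apply


@dataclass
class Theme:
    """A theme groups related codes under a broader meaning."""
    name: str
    codes: list[Code] = field(default_factory=list)


def codes_to_themes(themes: list[Theme]) -> dict[str, str]:
    """Build a lookup from each code name to its parent theme name."""
    return {code.name: theme.name for theme in themes for code in theme.codes}


# Hypothetical entries for a study of remote workers.
isolation = Code(
    name="isolation",
    definition="Participant describes feeling cut off from colleagues.",
    include="Mentions of loneliness or missing informal contact.",
    exclude="Complaints about tools or connectivity.",
)
blurred_hours = Code(
    name="blurred_hours",
    definition="Work time spills into personal time.",
    include="Working evenings or weekends, difficulty switching off.",
    exclude="Planned flexible schedules the participant values.",
)
themes = [
    Theme("Social disconnection", [isolation]),
    Theme("Work-life boundaries", [blurred_hours]),
]
lookup = codes_to_themes(themes)
```

Keeping the inclusion and exclusion rules next to each definition means a second coder can apply the same code without guessing at its boundaries.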

For a deeper dive into the nuances of this method and other qualitative approaches, you can learn more about Thematic Analysis and related techniques to refine your research workflow.

2. Grounded Theory

Grounded theory is a systematic and rigorous qualitative research analysis technique used to develop a new theory from the data itself. Developed by sociologists Barney Glaser and Anselm Strauss, this method is strongly inductive. Instead of starting with a hypothesis to test, the researcher builds a "grounded" theory from the ground up, based on the patterns, actions, and social processes observed in the collected data.

The core principle of grounded theory is the simultaneous collection and analysis of data. Insights from early analysis shape subsequent data collection through a process called theoretical sampling, continuing until no new conceptual insights emerge, a point known as "theoretical saturation."
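Theoretical saturation is a conceptual judgment, not a number, but a simple tally can help you notice when new interviews stop adding codes. The sketch below is a rough heuristic under that assumption; the function name, the `patience` parameter, and the example codes are all hypothetical.

```python
from typing import Optional


def saturation_point(codes_per_interview: list[set[str]], patience: int = 2) -> Optional[int]:
    """Return the 1-based interview number at which `patience` consecutive
    interviews have contributed no previously unseen code, or None if that
    never happens."""
    seen: set[str] = set()
    quiet_run = 0  # consecutive interviews with no new codes
    for number, codes in enumerate(codes_per_interview, start=1):
        new_codes = codes - seen
        seen |= codes
        quiet_run = 0 if new_codes else quiet_run + 1
        if quiet_run >= patience:
            return number
    return None


# Hypothetical codes assigned to four successive interviews.
interviews = [
    {"denial", "bargaining"},
    {"denial", "family_support"},
    {"bargaining"},
    {"family_support", "denial"},
]
stop_at = saturation_point(interviews)
```

A result like this is only a prompt to reflect. Whether the categories are conceptually complete still has to be argued from the data and your memos.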

When to Use Grounded Theory

This methodology is exceptionally well-suited for investigating social processes, interactions, and experiences where little is known or existing theories are inadequate. It is ideal for generating a comprehensive explanation of a phenomenon that is deeply rooted in real-world contexts.

- Developing New Theories: When you want to explain a process or phenomenon for which no sufficient theory exists, such as exploring the stages of professional identity development in a new field.

- Understanding Social Processes: It is perfect for mapping out how people navigate complex situations, like the original Glaser and Strauss study on "Awareness of Dying" in hospitals.

- Explaining Change: Researchers use it to build theories on how individuals or organizations manage change, such as understanding the process of addiction recovery.

How Grounded Theory Works: A Step-by-Step Overview

Grounded theory involves a distinct, iterative workflow that moves from granular data to abstract theoretical concepts. The process typically begins with open coding, where the researcher breaks down the data into discrete parts and labels them with codes. This is followed by axial coding to draw connections between codes, and finally, selective coding to integrate these concepts into a core theoretical framework.

The constant comparison of data with emerging codes and categories is the engine that drives the analysis, ensuring the final theory is truly representative of the participants' experiences. This iterative cycle of data collection and analysis is central to its methodology.

Actionable Tip: Throughout your analysis, practice extensive "memo-writing." Memos are written records of your analytical thoughts, interpretations, and emerging theoretical ideas. They serve as a crucial bridge between your raw data and the final theory. Using a tool like Zemith.com allows you to link these memos directly to data excerpts, creating a traceable audit trail of your conceptual leaps.

To effectively manage the complex, iterative process of coding and memoing inherent in grounded theory, a powerful tool is essential. You can learn more about how Zemith enhances qualitative analysis by providing a structured environment for these intricate workflows.

3. Interpretative Phenomenological Analysis (IPA)

Interpretative Phenomenological Analysis (IPA) is a detailed and experiential qualitative research analysis technique focused on understanding how individuals make sense of their significant life experiences. Popularized by Jonathan Smith, Paul Flowers, and Mike Larkin, IPA delves deeply into the personal, lived reality of participants. Its core principle is the "double hermeneutic," where the researcher actively interprets the participant's own interpretation of their experience, providing a rich, in-depth account of a particular phenomenon from a specific individual's perspective.

The goal of IPA is not to produce broad generalizations but to offer a nuanced, contextualized understanding of a small number of cases. This idiographic focus allows for a powerful exploration of the meanings people attach to events, making it a uniquely insightful method for examining deeply personal topics.

When to Use IPA

This approach is best suited for research that seeks to understand the subjective experience of a major life event or condition. It’s ideal for research questions like "What is it like for first-time mothers to experience post-natal anxiety?" or "How do long-term survivors of a chronic illness perceive their quality of life?"

- Exploring Lived Experiences: When the research aims to understand the essence and meaning of a phenomenon, such as living with a chronic illness or navigating a significant career change.

- Small, Homogeneous Samples: IPA works best with small, purposive samples (typically 3-6 participants) who share a specific experience, allowing for a deep rather than broad analysis.

- Developing Rich Participant Narratives: It is perfect for studies where the personal story and individual perspective are paramount, providing a voice to participants' unique journeys.

How IPA Works: A Step-by-Step Overview

The IPA process is iterative and highly intensive, involving a detailed case-by-case analysis before comparing across cases. The researcher engages in a cycle of reading, reflecting, and interpreting to move from the participant's raw words to profound psychological insights.

The initial steps involve deep immersion in each individual transcript, identifying emergent themes for one case before moving to the next. This ensures each participant's voice is fully explored in its own context before any cross-case analysis begins. This meticulous approach is what distinguishes IPA as one of the most in-depth qualitative research analysis techniques.
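The case-by-case-then-cross-case order can be sketched as a small bookkeeping step: record themes for each participant separately, and only afterwards look for recurrence. The `min_share` threshold and the example themes are assumptions made for illustration; IPA does not fix a numeric cutoff, and the interpretive work happens before any counting.

```python
from collections import Counter


def recurrent_themes(case_themes: dict[str, set[str]], min_share: float = 0.5) -> list[str]:
    """Return themes present in at least `min_share` of the cases, sorted by name."""
    counts = Counter(theme for themes in case_themes.values() for theme in themes)
    threshold = min_share * len(case_themes)
    return sorted(theme for theme, n in counts.items() if n >= threshold)


# Hypothetical themes identified separately for each participant.
cases = {
    "P1": {"loss of control", "guilt", "seeking reassurance"},
    "P2": {"guilt", "seeking reassurance"},
    "P3": {"loss of control", "guilt", "isolation"},
}
shared = recurrent_themes(cases)
```

Themes that fall below the threshold, such as "isolation" here, are not discarded. In an idiographic method they may be the most telling part of one participant's account.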

Actionable Tip: Maintain a reflective journal throughout the analysis process. Document your assumptions, initial thoughts, and developing interpretations as you engage with each transcript. This practice supports "bracketing," the effort to recognize and set aside your own preconceptions, and enhances the transparency of your findings. A tool like Zemith provides a dedicated space to keep these journals alongside your transcripts, ensuring your reflections are always connected to the source data.

For researchers seeking to manage the complex, iterative nature of IPA and other qualitative methods, tools like Zemith can help organize transcripts, memos, and interpretive notes in a structured knowledge base, ensuring no insight is lost.

4. Content Analysis

Content analysis is a systematic and objective qualitative research analysis technique used to make replicable and valid inferences from texts and other meaningful media. It involves identifying the presence of certain words, concepts, themes, or patterns within qualitative data. Pioneered by figures like Bernard Berelson, this method allows researchers to quantify and analyze the presence, meanings, and relationships of these concepts. It bridges qualitative and quantitative approaches by often counting frequencies alongside interpreting contextual meaning.

The core of content analysis lies in its structured process of creating a coding scheme, categorizing content according to these rules, and then examining the results. This makes it a powerful tool for systematically understanding large volumes of communication, from historical documents to modern social media posts.

When to Use Content Analysis

This method is exceptionally useful when you need to analyze documented communication in a methodical way. It's ideal for research questions focused on understanding communication trends, media representation, or public discourse, such as "How has news coverage of climate change evolved over the last decade?" or "What are the dominant themes in customer feedback on our new product?"

- Media Studies: To analyze news articles, advertisements, or TV programs for bias, representation, or recurring themes.

- Social Media Monitoring: For tracking brand sentiment, identifying emerging trends, or understanding public opinion on platforms like Twitter and Facebook.

- Policy and Document Analysis: To systematically review legal documents, political speeches, or corporate reports for specific language or policy commitments.

- Educational Research: For examining textbooks and curricula for inclusivity, bias, or the emphasis placed on certain topics.

How Content Analysis Works: A Step-by-Step Overview

Content analysis involves a methodical workflow that turns unstructured text into structured data. The process begins with defining what you will analyze (the unit of analysis) and how you will categorize it (the coding scheme). This systematic approach ensures the findings are reliable and can be replicated by other researchers. The goal is to identify both manifest content (what is explicitly stated) and latent content (the underlying, implicit meaning).
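As a rough illustration of a coding scheme applied to a unit of analysis, the sketch below counts manifest content only: it treats each feedback comment as one unit and assigns a category when any of its keywords appears. The categories, keywords, and sample comments are invented for the example, and keyword matching cannot capture latent meaning.

```python
import re
from collections import Counter

# Hypothetical coding scheme: category -> keywords that signal it.
CODING_SCHEME = {
    "price": {"price", "cost", "expensive", "cheap"},
    "usability": {"easy", "confusing", "intuitive"},
}


def code_unit(text: str, scheme: dict[str, set[str]]) -> set[str]:
    """Return the categories whose keywords appear in one unit of analysis."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {category for category, keywords in scheme.items() if words & keywords}


def category_frequencies(units: list[str], scheme: dict[str, set[str]]) -> Counter:
    """Count how many units were assigned each category."""
    counts: Counter = Counter()
    for unit in units:
        counts.update(code_unit(unit, scheme))
    return counts


feedback = [
    "The app is easy to set up but far too expensive.",
    "Confusing menus. I gave up.",
    "Great price for what you get.",
]
frequencies = category_frequencies(feedback, CODING_SCHEME)
```

Each unit counts at most once per category, so the totals describe how many comments raise a topic, not how many times a word occurs.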

To see how content analysis is applied in a digital context, particularly for understanding online communication, explore various strategies for social media content analysis.

Actionable Tip: To ensure objectivity and consistency, develop a detailed coding manual with clear operational definitions for each category. Use at least two independent coders and measure their inter-rater reliability. A platform like Zemith.com can facilitate this by allowing multiple users to code the same documents within a shared project, making it easier to compare results and calculate reliability.
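One common way to measure agreement between two coders is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The article does not name a specific statistic, so choosing kappa here is an assumption, and the labels below are made-up data.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders who labeled the same units in the same order."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of units.")
    n = len(coder_a)
    # Share of units on which the coders agree.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's category proportions.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two independent coders for four units.
coder_a = ["pos", "pos", "neg", "neg"]
coder_b = ["pos", "neg", "neg", "neg"]
kappa = cohens_kappa(coder_a, coder_b)
```

In this example the coders agree on three of four units (0.75), chance agreement is 0.5, and kappa works out to 0.5. Low values usually point to ambiguous category definitions in the coding manual.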

For researchers dealing with large datasets, effective organization is critical. You can learn more about systematic research data management to ensure your content analysis projects are well-structured and efficient.

5. Narrative Analysis

Narrative analysis is a powerful qualitative research analysis technique that focuses on the stories people tell. It moves beyond simply extracting information to examine how individuals construct and use narratives to make sense of their experiences, identities, and the world around them. Popularized by figures like Jerome Bruner and Catherine Kohler Riessman, this method analyzes the structure, content, and function of stories, revealing deep insights into human perspective.

At its core, narrative analysis treats personal stories as a rich data source. Researchers examine plot, characters, setting, and turning points not just for what they say, but for what they reveal about the storyteller’s values, beliefs, and cultural context. It’s about understanding the meaning people create through the act of storytelling itself.

When to Use Narrative Analysis

This method is uniquely suited for research that aims to understand individual experiences in-depth, exploring how events unfold over time from a personal viewpoint. It is ideal for research questions focused on identity, personal transformation, and sense-making.

- Exploring Lived Experiences: It is perfect for capturing the richness of life histories, such as in illness narratives in healthcare, career transition stories in organizational psychology, or trauma and recovery accounts in counseling research.

- Understanding Identity Construction: When you want to analyze how people build and present their identities through storytelling, making it valuable for studies in sociology, psychology, and cultural studies.

- Case Study Research: It provides a compelling framework for a deep, holistic analysis of a single individual's or a small group's experience over time.

How Narrative Analysis Works: A Step-by-Step Overview

The process of narrative analysis involves immersing yourself in the stories shared by participants and deconstructing them to understand their underlying structure and meaning. The goal is to see the world from the narrator's perspective and analyze how their story functions.

The workflow typically involves identifying core narrative elements, analyzing their sequence, and interpreting their broader significance. This approach prioritizes the coherence and context of the story over fragmenting data into isolated codes, as is common in other methods.

Actionable Tip: When analyzing narratives, pay close attention to the "narrative arc." Identify the beginning (initial situation), middle (turning point), and end (resolution). Use a tool like Zemith’s Smart Notepad to map these structural components for each story, making it easier to compare narrative structures across participants and uncover shared patterns in how they make sense of their experiences.

For a comprehensive understanding of how to situate your findings within the broader academic conversation, you can explore best practices for crafting a narrative and literature review that effectively contextualizes your story-based research.

6. Discourse Analysis

Discourse analysis is a powerful qualitative research analysis technique that moves beyond the literal meaning of words to examine how language functions within social contexts. It explores how language is used to construct identities, social realities, and power dynamics. Influenced by thinkers like Michel Foucault and Norman Fairclough, this method treats language not just as a tool for communication, but as a form of social action that shapes our world.

The core of discourse analysis is to scrutinize texts, conversations, or other forms of communication to understand the underlying assumptions, ideologies, and power structures at play. It answers questions about how certain topics are talked about, who has the authority to speak, and what social effects this language use produces.

When to Use Discourse Analysis

This method is particularly useful when your research aims to uncover the social and political dimensions of language. It is ideal for investigating how communication shapes beliefs, norms, and social structures, going deeper than what is explicitly stated.

- Analyzing Power Relations: It is highly effective for examining how language is used in political speeches, media reporting, or organizational policies to maintain or challenge existing power dynamics.

- Understanding Social Constructs: It's perfect for research questions exploring how concepts like gender, illness, or national identity are constructed and reinforced through everyday talk and media.

- Investigating Ideology: Use it to deconstruct the ideological underpinnings of texts, such as how advertisements create consumer desire or how news outlets frame complex issues like climate change.

How Discourse Analysis Works: A Step-by-Step Overview

While there are many approaches to discourse analysis, the process generally involves moving from a close reading of specific linguistic features to an interpretation of their broader social significance. The goal is to connect the "micro" details of language use with the "macro" social context.

The initial stages involve selecting appropriate materials, identifying linguistic patterns (like metaphors, rhetoric, and tone), and analyzing how these patterns function to create specific meanings or effects. This workflow transforms a simple text into a rich source of insight about social reality.

Actionable Tip: Pay close attention to what is not said. Silences, omissions, and unspoken assumptions can be just as revealing as the words themselves. Create a specific code or tag in your analysis software for "absences" or "omissions" to systematically track where certain voices or topics are missing, helping you uncover dominant ideologies.
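Tracking absences can be as simple as comparing the voices you expected to find against those you actually tagged in each text. This is a minimal sketch; the expected voices, document names, and tags are hypothetical, and deciding which voices ought to be present is itself an interpretive choice.

```python
# Hypothetical set of voices the analyst expects in coverage of a workplace dispute.
EXPECTED_VOICES = {"employees", "management", "union"}


def find_absences(tagged_docs: dict[str, set[str]], expected: set[str]) -> dict[str, list[str]]:
    """Map each document to the expected voices that were never tagged in it."""
    absences = {}
    for doc, present in tagged_docs.items():
        missing = expected - present
        if missing:
            absences[doc] = sorted(missing)
    return absences


# Voices the analyst tagged while reading each document.
tagged = {
    "press_release": {"management"},
    "town_hall_transcript": {"employees", "management", "union"},
}
absences = find_absences(tagged, EXPECTED_VOICES)
```

The output only shows where to look. Interpreting why a voice is missing is the analytic work.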

For researchers looking to manage complex textual data and connect micro-level linguistic details to macro-level social themes, a robust tool is essential. You can explore how platforms like Zemith help organize and link intricate datasets, making sophisticated analysis more manageable.

7. Framework Analysis

Framework Analysis is a systematic and highly structured qualitative research analysis technique developed for applied policy research. Popularized by Jane Ritchie and Liz Spencer, it offers a pragmatic, matrix-based approach to managing and analyzing large qualitative datasets. Its key strength lies in its ability to systematically compare data across cases while retaining the rich context of each individual account.

This method involves a clear, staged process: familiarization, identifying a thematic framework, indexing, charting, and finally, mapping and interpretation. The "charting" stage, where data is summarized and entered into a matrix, is the distinctive feature of this approach. This matrix has rows for cases (e.g., individual participants) and columns for codes or themes, providing a clear visual overview of the entire dataset.
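The charting matrix described above can be sketched as a nested dictionary with one row per case and one column per theme. The themes, case IDs, and charted entries are invented for illustration, and the function names are assumptions, not part of Ritchie and Spencer's method.

```python
# Hypothetical thematic framework for a healthcare access study.
THEMES = ["Access", "Cost"]


def build_framework_matrix(charted: list[tuple[str, str, str]], themes: list[str]) -> dict[str, dict[str, list[str]]]:
    """Place each (case, theme, entry) into a case-by-theme matrix."""
    matrix: dict[str, dict[str, list[str]]] = {}
    for case, theme, entry in charted:
        if theme not in themes:
            raise ValueError(f"Theme {theme!r} is not in the framework")
        row = matrix.setdefault(case, {t: [] for t in themes})
        row[theme].append(entry)
    return matrix


def render_rows(matrix: dict[str, dict[str, list[str]]], themes: list[str]) -> list[list[str]]:
    """Flatten the matrix into a header row plus one row per case."""
    rows = [["Case", *themes]]
    for case in sorted(matrix):
        cells = ("; ".join(matrix[case][t]) or "(no data)" for t in themes)
        rows.append([case, *cells])
    return rows


# Summaries and quotes produced during indexing.
charted = [
    ("P1", "Access", "Waited six weeks for a referral."),
    ("P1", "Cost", "'I skipped the follow-up because of the fee.'"),
    ("P2", "Access", "Clinic is a two-hour bus ride away."),
]
matrix = build_framework_matrix(charted, THEMES)
rows = render_rows(matrix, THEMES)
```

Empty cells are kept visible as "(no data)" because a theme that is missing for a case is itself a finding worth comparing across rows.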

When to Use Framework Analysis

This approach is particularly valuable when research objectives are clearly defined from the outset and when you need to generate findings that can directly inform policy or practice. It is exceptionally well-suited for large-scale studies, especially those involving multiple researchers or different sites.

- Applied Policy Research: When the goal is to produce specific recommendations for policy, such as in healthcare or social work, by comparing experiences across a specific demographic.

- Team-Based Projects: The systematic, matrix-based structure makes it easier for multiple researchers to work on the same dataset consistently.

- Large Datasets: It is an excellent tool for managing and summarizing large volumes of qualitative data without losing the nuances of individual cases.

How Framework Analysis Works: A Step-by-Step Overview

The core of Framework Analysis is its structured process that moves from raw data to an organized, interpretable matrix. The process ensures that the analysis is transparent, rigorous, and directly linked to the research questions. A simplified workflow involves creating a thematic framework from initial readings, applying this framework to index the data, and then charting the indexed data into a summary matrix for comparison and interpretation.

This methodical progression ensures that findings are grounded in the participants' original accounts while allowing for systematic, cross-case analysis. It balances the need for deep, contextual understanding with the practical requirement of producing clear, actionable conclusions.

Actionable Tip: When charting data into the matrix, use a mix of direct quotes and concise summaries. Platforms like Zemith.com can accelerate this by allowing you to quickly query documents for relevant quotes and synthesize summaries with AI assistance, ensuring you maintain a direct link back to the source material while building an efficient, scannable matrix.

For researchers looking to implement this method efficiently, tools designed for structured analysis are invaluable. Exploring how Zemith can support systematic data management and charting will enhance the rigor and speed of your Framework Analysis.

Qualitative Analysis Techniques Comparison

| Method | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Thematic Analysis | Moderate - Six-phase coding process | Moderate - Time-consuming for large data | Pattern identification across datasets | Exploratory research, pattern detection | Flexible, accessible, rich data description |
| Grounded Theory | High - Complex coding (open, axial, selective) | High - Long duration, expert skills needed | New theory generation, substantive theory | Theory development from data | Systematic, rigorous, data-grounded theory |
| Interpretative Phenomenological Analysis (IPA) | High - Deep idiographic, double hermeneutic | Moderate - Small samples, interpretive skills | Rich insights into lived experience | Understanding individual experiences | Deep, nuanced, respects uniqueness |
| Content Analysis | Moderate - Coding scheme development | Moderate to High - Can handle large data | Quantified or interpreted content | Media, communication studies, document analysis | Systematic, replicable, handles large data |
| Narrative Analysis | High - Requires nuanced narrative skill | Moderate - Focus on stories and contexts | Understanding stories, identity, transitions | Life histories, identity studies | Captures complexity; respects meaning-making |
| Discourse Analysis | High - Theoretical depth, interpretive | High - Requires expert knowledge | Reveal power, ideology in language | Social/political discourse, power analysis | Uncovers hidden power structures |
| Framework Analysis | Moderate to High - Matrix-based stages | Moderate - Team collaboration, software aided | Systematic, transparent thematic matrix | Applied policy, multi-site, team-based research | Systematic, transparent, supports teams |

Choosing Your Method and Streamlining Your Workflow with AI

The journey through the landscape of qualitative research analysis techniques reveals a powerful truth: there is no single "best" method. The right choice is entirely context-dependent, shaped by your specific research question, the nature of your data, and your philosophical approach. Each technique offers a unique lens for interpretation.

Whether you are identifying recurring patterns with Thematic Analysis, building a new theory from the ground up with Grounded Theory, or deconstructing social narratives with Discourse Analysis, the goal remains the same: to transform raw data into meaningful, rigorous insight. The challenge has always been the immense manual effort and time investment these methods demand. This is where modern tools are fundamentally changing the game.

From Manual Sifting to AI-Powered Synthesis

The meticulous process of coding, categorizing, and interpreting qualitative data is where researchers spend the bulk of their time. While the human element of critical thinking is irreplaceable, the administrative and organizational burden can be overwhelming. This is precisely where AI-driven platforms like Zemith act as a powerful research assistant, streamlining the workflow without compromising analytical depth.

Instead of wrestling with scattered documents and spreadsheets, you can leverage Zemith's AI to handle the heavy lifting of initial data processing. Imagine a tool that can instantly summarize lengthy interview transcripts, pull out key quotes based on your queries, and suggest preliminary thematic clusters. This frees you up to focus on the higher-level work of interpretation, connection-making, and narrative construction, which is the true heart of qualitative analysis. By automating the tedious parts of the process, you not only accelerate your timeline but also create more mental space for the deep, reflective thinking that leads to groundbreaking discoveries. For those new to this integration, a foundational guide to understanding AI technology can be incredibly beneficial in demystifying how these tools work and how to best apply them to your research.

Actionable Next Steps for Your Qualitative Journey

Mastering these qualitative research analysis techniques is an ongoing process of learning and application. As you move forward, consider these steps to solidify your skills and enhance your workflow:

- Align Your Method and Question: Before writing a single code, revisit your core research question. Ask yourself: "Which of these methods (Thematic, Grounded Theory, IPA, etc.) provides the most direct and insightful path to answering this specific question?" This initial alignment is the single most important factor for a successful analysis.

- Conduct a Pilot Analysis: Select a small, representative sample of your data (e.g., one interview transcript, a few pages of field notes) and apply your chosen technique. This small-scale trial run helps you refine your coding framework and identify potential challenges before you commit to analyzing the entire dataset.

- Integrate a Centralized Workspace: The era of juggling multiple apps for notes, transcripts, and analysis is over. Adopt a unified platform like Zemith to centralize your entire research project. Use its Document Assistant to query your data and the Smart Notepad to draft your findings, creating a seamless and efficient workflow.

- Embrace Iteration: Qualitative analysis is not a linear path; it is cyclical and iterative. Continuously revisit your codes, themes, and interpretations as you immerse yourself deeper into the data. Your understanding will evolve, and your analysis should reflect that dynamic process.

Ultimately, the power of qualitative research lies in its ability to uncover the rich, nuanced, and complex tapestry of human experience. By thoughtfully selecting your analytical technique and leveraging the power of AI to manage your workflow, you position yourself not only to find answers but also to tell compelling, data-driven stories that resonate and inspire action.

Ready to transform your research process from scattered and slow to streamlined and insightful? Discover how Zemith integrates powerful AI into a unified workspace, helping you analyze documents, draft findings, and uncover deep insights faster than ever before. Explore Zemith and elevate your qualitative analysis today.

Explore Zemith Features

Introducing Zemith

The best tools in one place, so you can quickly leverage the best tools for your needs.

All in One AI Platform

Go beyond AI Chat, with Search, Notes, Image Generation, and more.

Cost Savings

Access latest AI models and tools at a fraction of the cost.

Get Sh*t Done

Speed up your work with productivity, work and creative assistants.

Constant Updates

Receive constant updates with new features and improvements to enhance your experience.

Features

Selection of Leading AI Models

Access multiple advanced AI models in one place - featuring Gemini-2.5 Pro, Claude 4.5 Sonnet, GPT 5, and more to tackle any tasks

Speed run your documents

Upload documents to your Zemith library and transform them with AI-powered chat, podcast generation, summaries, and more

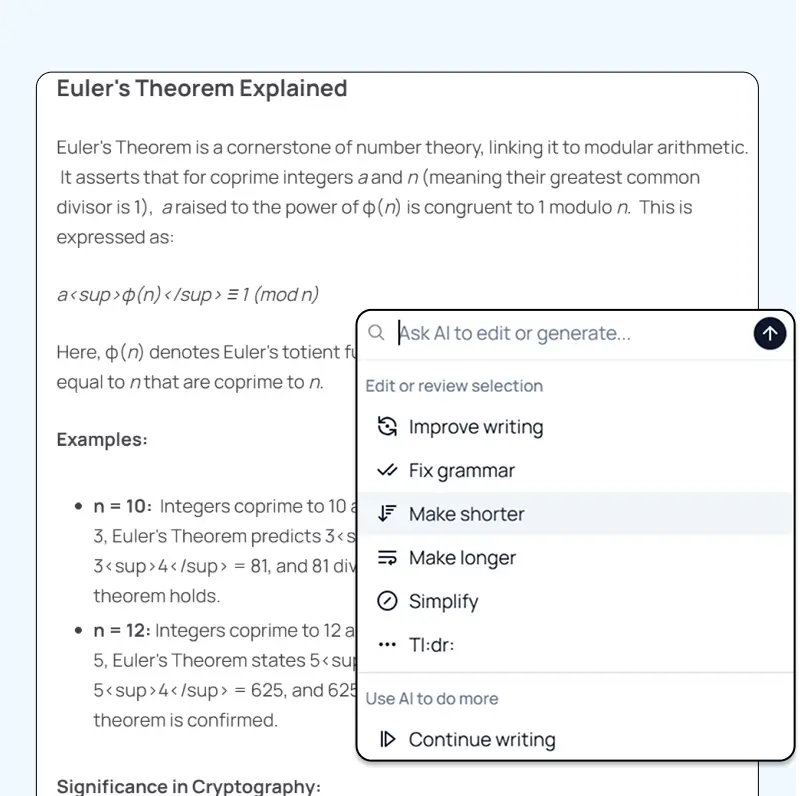

Transform Your Writing Process

Elevate your notes and documents with AI-powered assistance that helps you write faster, better, and with less effort

Unleash Your Visual Creativity

Transform ideas into stunning visuals with powerful AI image generation and editing tools that bring your creative vision to life

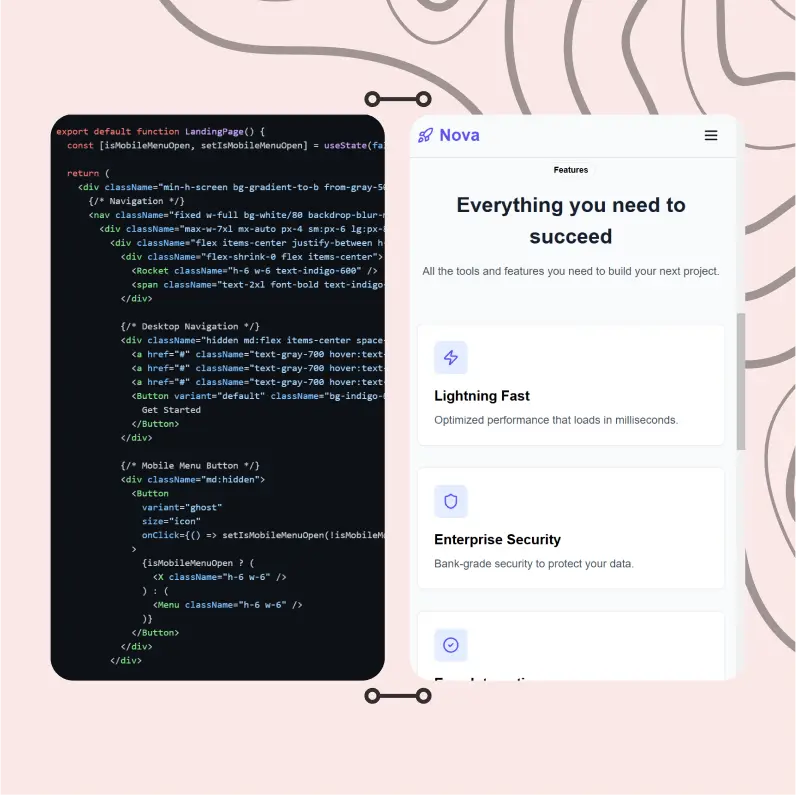

Accelerate Your Development Workflow

Boost productivity with an AI coding companion that helps you write, debug, and optimize code across multiple programming languages

Powerful Tools for Everyday Excellence

Streamline your workflow with our collection of specialized AI tools designed to solve common challenges and boost your productivity

Live Mode for Real Time Conversations

Speak naturally, share your screen and chat in realtime with AI

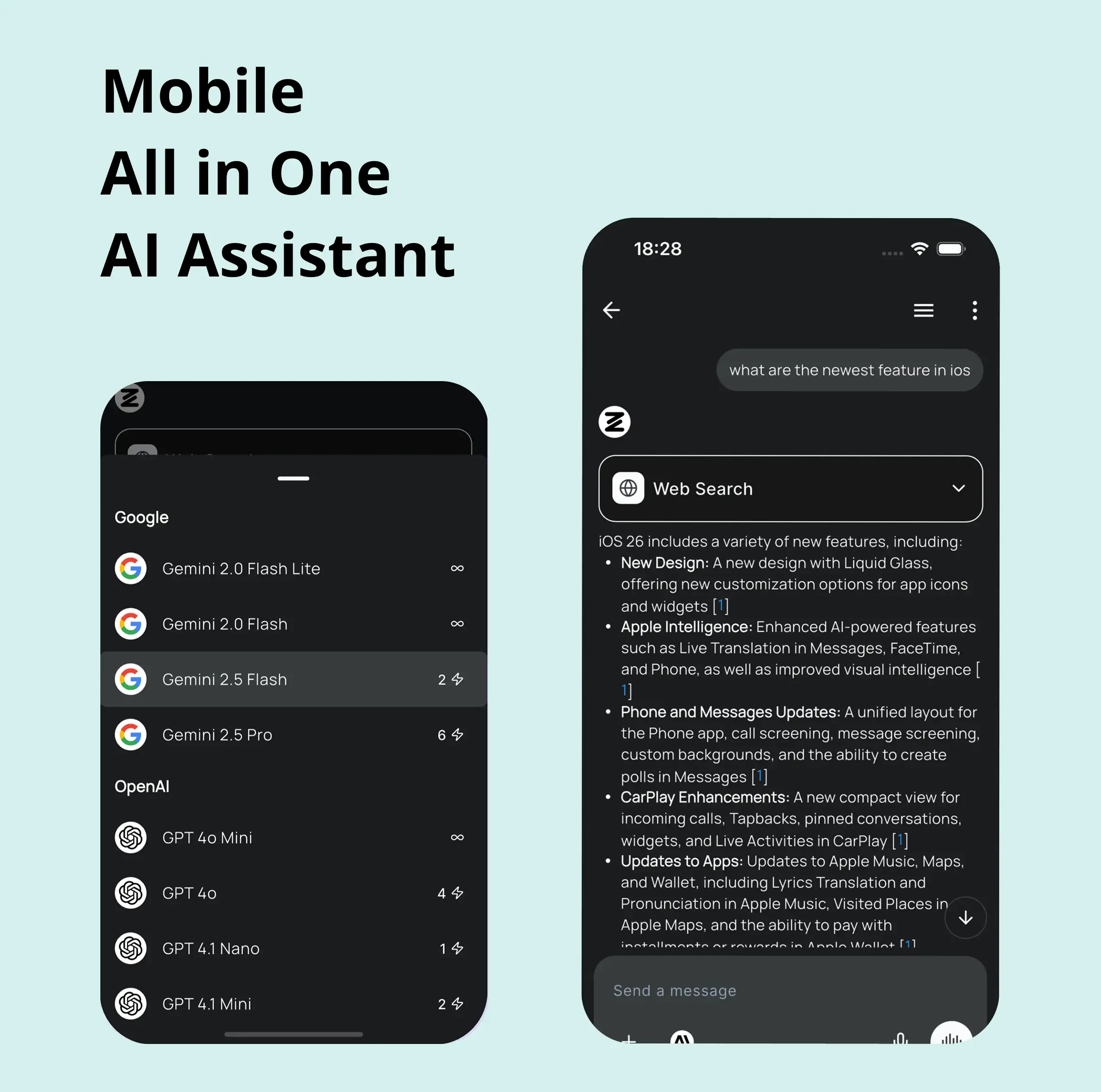

AI in your pocket

Experience the full power of Zemith AI platform wherever you go. Chat with AI, generate content, and boost your productivity from your mobile device.

Deeply Integrated with Top AI Models

Beyond basic AI chat - deeply integrated tools and productivity-focused OS for maximum efficiency

Straightforward, affordable pricing

Save hours of work and research

Affordable plan for power users

Plus

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

Professional

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

- 10000 Credits Monthly

- Access to plus features

- Access to Plus Models

- Access to tools such as web search, canvas usage, deep research tool

- Access to Creative Features

- Access to Documents Library Features

- Upload up to 50 sources per library folder

- Access to Custom System Prompt

- Access to FocusOS up to 15 tabs

- Unlimited model usage for Gemini 2.5 Flash Lite

- Set Default Model

- Access to Max Mode

- Access to Document to Podcast

- Access to Document to Quiz Generator

- Access to on demand credits

- Access to latest features

- Everything in Plus, and:

- 21000 Credits Monthly

- Access to Pro Models

- Access to Pro Features

- Access to Video Generation

- Unlimited model usage for GPT 5 Mini

- Access to code interpreter agent

- Access to auto tools

What Our Users Say

Great Tool after 2 months usage

simplyzubair

I love the way multiple tools they integrated in one platform. So far it is going in right dorection adding more tools.

Best in Kind!

barefootmedicine

This is another game-change. have used software that kind of offers similar features, but the quality of the data I'm getting back and the sheer speed of the responses is outstanding. I use this app ...

simply awesome

MarianZ

I just tried it - didnt wanna stay with it, because there is so much like that out there. But it convinced me, because: - the discord-channel is very response and fast - the number of models are quite...

A Surprisingly Comprehensive and Engaging Experience

bruno.battocletti

Zemith is not just another app; it's a surprisingly comprehensive platform that feels like a toolbox filled with unexpected delights. From the moment you launch it, you're greeted with a clean and int...

Great for Document Analysis

yerch82

Just works. Simple to use and great for working with documents and make summaries. Money well spend in my opinion.

Great AI site with lots of features and accessible llm's

sumore

what I find most useful in this site is the organization of the features. it's better that all the other site I have so far and even better than chatgpt themselves.

Excellent Tool

AlphaLeaf

Zemith claims to be an all-in-one platform, and after using it, I can confirm that it lives up to that claim. It not only has all the necessary functions, but the UI is also well-designed and very eas...

A well-rounded platform with solid LLMs, extra functionality

SlothMachine

Hey team Zemith! First off: I don't often write these reviews. I should do better, especially with tools that really put their heart and soul into their platform.

This is the best tool I've ever used. Updates are made almost daily, and the feedback process is very fast.

reu0691

This is the best AI tool I've used so far. Updates are made almost daily, and the feedback process is incredibly fast. Just looking at the changelogs, you can see how consistently the developers have ...